It seems like time to post some images from the in-progress precomputed lighting tech that Ignacio is working on. See here for a description of the basics behind what we are doing for lighting. The lightmaps are now 16-bit, and these shots use a simple exponential tone mapper.
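An exponential tone mapper compresses unbounded HDR values into the displayable range with a curve of the form 1 - exp(-exposure * L). The post does not give the exact curve or exposure used for these shots. What follows is a minimal sketch of that common form, not the engine's code. `exp_tonemap`, `tonemap_rgb`, and the `exposure` parameter are illustrative names.

```python
import math
from typing import Tuple

def exp_tonemap(radiance: float, exposure: float = 1.0) -> float:
    """Map an HDR value in [0, inf) to [0, 1) with 1 - exp(-exposure * L).

    Hypothetical helper: the exact curve and exposure used for the
    screenshots are not stated in the post. Negative input is clamped to 0.
    """
    return 1.0 - math.exp(-exposure * max(radiance, 0.0))

def tonemap_rgb(rgb: Tuple[float, float, float], exposure: float = 1.0) -> Tuple[int, int, int]:
    """Tone-map each channel independently and quantize to 8 bits for display."""
    return tuple(round(255 * exp_tonemap(c, exposure)) for c in rgb)

if __name__ == "__main__":
    # Bright values saturate smoothly instead of clipping; a channel three
    # times brighter than another is not displayed three times brighter.
    print(tonemap_rgb((0.5, 1.5, 8.0)))
```

The curve is monotonic and never quite reaches 1, so very bright texels roll off toward white rather than clipping hard.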

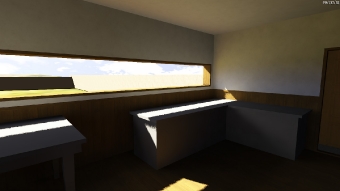

First, I've taken shots from 3 positions inside a house, with four different lighting settings. For all four settings, we are computing only the first lighting bounce. The parameters are:

- 32x32 cube maps, adaptive sampling, quality = 0.5. Time to compute: 197 seconds.

- 32x32 cube maps, non-adaptive sampling. Time to compute: 648 seconds.

- 64x64 cube maps, non-adaptive sampling. Time to compute: 738 seconds.

- 128x128 cube maps, non-adaptive sampling. Time to compute: 1570 seconds.


*Images: the front door and two other positions in the house, each rendered with 32x32 adaptive, 32x32, 64x64, and 128x128 cube maps.*

The hardware used to compute these timings is an Intel Core i7-860 with an ATI Radeon 5870 (most of the process is GPU-bound).

The non-adaptive sampling renders one cube map for each lightmap texel, whereas the adaptive sampling uses heuristics to place samples and then smooths the map between them. At higher quality settings, adaptive sampling provides more accurate results, but there always seem to be artifacts, so for the time being we are using it as a fast preview mode. If at some point the quality becomes shippable, we would switch to adaptive sampling for everything.

We also have a sub-texel sampling mode that improves quality, but I didn't play with that during this run.
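The post does not describe the heuristics the adaptive mode uses. As a rough illustration of the general idea (sample sparsely, refine only where values change, interpolate elsewhere), here is a minimal quadtree-refinement sketch. This is a swapped-in textbook technique, not Ignacio's algorithm. `sample` stands in for the expensive per-texel cube-map render, and `adaptive_bake` and `threshold` are hypothetical names.

```python
from typing import Callable, Dict, List, Tuple

def adaptive_bake(
    n: int, sample: Callable[[int, int], float], threshold: float
) -> Tuple[List[List[float]], int]:
    """Fill an (n+1) x (n+1) grid of texel values; n must be a power of two.

    `sample(x, y)` stands in for rendering a cube map at texel (x, y).
    Returns the filled grid and the number of expensive samples taken.
    """
    cache: Dict[Tuple[int, int], float] = {}

    def s(x: int, y: int) -> float:
        # Never render the same texel twice (neighboring blocks share corners).
        if (x, y) not in cache:
            cache[(x, y)] = sample(x, y)
        return cache[(x, y)]

    grid = [[0.0] * (n + 1) for _ in range(n + 1)]

    def fill(x0: int, y0: int, size: int) -> None:
        c00, c10 = s(x0, y0), s(x0 + size, y0)
        c01, c11 = s(x0, y0 + size), s(x0 + size, y0 + size)
        corners = (c00, c10, c01, c11)
        if size > 1 and max(corners) - min(corners) > threshold:
            # Corners disagree: refine this block into four quadrants.
            h = size // 2
            for dx, dy in ((0, 0), (h, 0), (0, h), (h, h)):
                fill(x0 + dx, y0 + dy, h)
            return
        # Corners agree (or the block is one texel wide): bilinearly
        # interpolate instead of rendering the interior texels.
        for j in range(size + 1):
            for i in range(size + 1):
                u, v = i / size, j / size
                grid[y0 + j][x0 + i] = (
                    (1 - u) * (1 - v) * c00 + u * (1 - v) * c10
                    + (1 - u) * v * c01 + u * v * c11
                )

    fill(0, 0, n)
    return grid, len(cache)

if __name__ == "__main__":
    # A hard lighting edge at x = 4 on a 9 x 9 grid of texels.
    grid, taken = adaptive_bake(8, lambda x, y: 1.0 if x >= 4 else 0.0, threshold=0.5)
    print(taken, "samples instead of", 9 * 9)
```

Even this toy version shows where artifacts of the kind mentioned above can come from. A small feature entirely inside a block whose corners happen to agree is never sampled. Where a large interpolated block borders refined ones, the shared edge can disagree (a T-junction), so the result depends on the threshold.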

This second set of images shows progressive bounces of the lighting with 64x64 cube maps and non-adaptive sampling. (The first one is the same 64x64 image as above.) The times are: 1 bounce, 738 seconds; 2 bounces, 1438 seconds; 3 bounces, 2481 seconds. The precompute time is roughly linear in the number of bounces, as one might expect, since the algorithm is basically doing the same thing n times.

*Images: 1 bounce, 2 bounces, 3 bounces (64x64 cube maps, non-adaptive sampling).*
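To see why the cost grows with the bounce count, it helps to write the bounces as repeated gather passes. Each pass takes the light that arrived on every surface in the previous pass and redistributes it once more. Here is a minimal sketch on a toy scene of patches. It assumes a precomputed form-factor matrix (`form_factors[i][j]`, the share of patch j seen from patch i) and a per-patch `albedo`. In the actual baker each gather would be the per-texel cube-map render rather than a matrix row. All names are hypothetical, and whether "1 bounce" also counts direct light is a convention the post does not spell out.

```python
from typing import List

def bake_bounces(
    direct: List[float],
    albedo: List[float],
    form_factors: List[List[float]],
    bounces: int,
) -> List[float]:
    """Accumulate direct light plus `bounces` indirect bounces on n patches."""
    n = len(direct)
    total = list(direct)
    previous = list(direct)
    for _ in range(bounces):
        # One gather pass: every patch collects the light that the previous
        # pass left on all other patches. The work per pass is identical,
        # so the total cost is proportional to the number of bounces.
        current = [
            albedo[i] * sum(form_factors[i][j] * previous[j] for j in range(n))
            for i in range(n)
        ]
        total = [t + c for t, c in zip(total, current)]
        previous = current
    return total

if __name__ == "__main__":
    # Two facing patches; only the first one is lit directly.
    ff = [[0.0, 0.5], [0.5, 0.0]]
    for k in range(4):
        print(k, bake_bounces([1.0, 0.0], [0.5, 0.5], ff, k))
```

Each extra bounce adds less light than the one before (it is scaled by albedo and form factors again), which is why a few bounces usually capture most of the indirect lighting.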

It might also be interesting to show the time it takes when using adaptive sampling with higher quality levels (0.75 or 1) with higher resolution cube maps. I would expect that to get pretty close to the quality required for shipping.

It may also be surprising that the difference in timings between the 32x32 and 64x64 cube maps is so small. I suspect the rendering time is about the same for both, because the bottleneck is in the geometry pipeline, and that the difference comes primarily from the cost of transferring and integrating 4 times as many texels.
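Here is a back-of-the-envelope check of that explanation, using only the three 1-bounce, non-adaptive timings reported above. It fits a fixed cost plus a cost proportional to texels per cube-map face through the 32x32 and 64x64 points, then checks what that predicts for 128x128. This is a two-point fit of an assumed model, not a profile.

```python
# 1-bounce, non-adaptive timings reported in the post (seconds).
timings = {32: 648.0, 64: 738.0, 128: 1570.0}

def texels(n: int) -> int:
    # Texels per cube-map face; the face count is a constant factor
    # that is absorbed into the fitted per-texel cost.
    return n * n

# Assumed model: T(n) = fixed + per_texel * texels(n), fitted to 32x32 and 64x64.
per_texel = (timings[64] - timings[32]) / (texels(64) - texels(32))
fixed = timings[32] - per_texel * texels(32)
predicted_128 = fixed + per_texel * texels(128)

print(f"fixed cost     ~ {fixed:.0f} s")
print(f"per-texel cost ~ {per_texel * 1000:.1f} ms per face texel")
print(f"128x128 model  ~ {predicted_128:.0f} s, measured {timings[128]:.0f} s")
```

The fit attributes about 618 of the 648 seconds at 32x32 to a resolution-independent cost, which is consistent with the geometry-bound explanation. However, it predicts about 1098 seconds at 128x128, well under the measured 1570. At that size, costs beyond this simple per-texel term seem to start mattering. With three data points this is suggestive at best.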

Good job. Glad I get to learn stuff from your posts. Keep up the good work, and keep me posted!!

Nice! But I hope you blur those hard shadow edges in the final game.

Interesting as always. Thanks for sharing.

I was wondering, why do you use your own tool for the pre-rendering of the lighting?

Why not use a professional render plugin for something like 3DSMAX, such as Maxwell, and bake the textures with it? I would think the quality would be much higher.

Or is it the fun part of creating your own implementation? Or cost?

We did play around with pre-rendering some lighting in Max, early on, to establish quality targets, but I don’t feel like that is a feasible way to go as a permanent process. Why would you think the quality would be higher? Serious question.

There are a lot of reasons why we do it ourselves. I’ll try to remember as many as I can here:

* When baking the lightmaps, the baking process is aware of the full scene, not just the local building or whatever. 3D Studio MAX would choke if you tried to load the full scene of an open-world game into it. It just can’t do it. So if you try to do this stuff in an editor, you have to somehow do a lot of extra work to build lightmaps that don’t have discontinuities or blatant wrongness in junction areas.

* Since a lot of work has to be done one way or another, it’s better to do it in a way that’s not tied to a 3rd-party tool that you have to pay for every year, that is flaky and crashes all the time, and is slow, and is barely even being developed at this point, because the company that now owns it has a monopoly on 3D modeling tools (they also bought Maya and SoftImage). Internally, Max is a pile of shit; this is not a controversial statement, and you will find general agreement on that fact among those who have ever made a plugin for it. So as long as we have to do a substantial amount of work, better to do that work in a way that creates an asset that the company can re-use in the long term, on the next game or whatever, without creating a maintenance and production liability.

* Workflow-wise, it is just much nicer to have this stuff in the in-game editor. You can be playing the game, decide you don’t like something, hit a key to pop open the editor to that exact game location, move an object, then run a low-quality rebake and see the results very quickly, without ever leaving the game.

* Because this is our own code, we can bake lightmaps on whatever platform we want with minimal effort (aside from the effort of porting to that platform, which in general does not take long). Next week we could be baking lightmaps on the PS3 if we decided that was a really good idea.

* If we want it for rendering purposes, it is pretty easy to augment our lightmaps with directional information — either spherical harmonics or a spatial vector basis the way the Source Engine does things. I have seen hacks to do this kind of thing in rendering packages, but they are definitely difficult to do and don’t allow for a lot of nuance. Maybe this kind of option has been built into something like Maxwell very recently, but I would be surprised at that, because it is strictly a realtime app kind of thing to want.

* We are going to be doing some things that involve generating lightmaps that add to each other, for example for apertures that open and close. Doing this in Max is another world-database navigation problem and has all the issues discussed above.

* Because this is our own code, we can give it out to other developers who also want a solution like this.
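As an illustration of the spatial-vector-basis option, radiosity normal mapping as published by Valve stores three lightmap values per texel, one per tangent-space basis direction, and blends them at runtime using the per-pixel normal. Below is a minimal sketch of the reconstruction side. The clamped, squared, normalized weights are one variant that appears in Valve's shader code. Other variants use plain clamped dot products, so the exact weighting in any given engine may differ. `BASIS` and `shade` are illustrative names.

```python
import math
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]

# Orthonormal tangent-space basis used for radiosity normal mapping
# (z points away from the surface; all three vectors tilt toward +z).
BASIS = (
    (math.sqrt(2.0 / 3.0), 0.0, 1.0 / math.sqrt(3.0)),
    (-1.0 / math.sqrt(6.0), 1.0 / math.sqrt(2.0), 1.0 / math.sqrt(3.0)),
    (-1.0 / math.sqrt(6.0), -1.0 / math.sqrt(2.0), 1.0 / math.sqrt(3.0)),
)

def shade(normal: Sequence[float], lightmaps: Sequence[RGB]) -> RGB:
    """Blend three directional lightmap samples for a unit tangent-space normal."""
    weights = []
    for b in BASIS:
        d = max(0.0, normal[0] * b[0] + normal[1] * b[1] + normal[2] * b[2])
        weights.append(d * d)  # clamp, square, then normalize below
    total = sum(weights)
    if total == 0.0:
        return (0.0, 0.0, 0.0)  # normal points away from every basis vector
    return tuple(
        sum(w * lm[c] for w, lm in zip(weights, lightmaps)) / total for c in range(3)
    )

if __name__ == "__main__":
    # A flat normal weights all three directions equally.
    print(shade((0.0, 0.0, 1.0), [(3.0, 0.0, 0.0), (0.0, 3.0, 0.0), (0.0, 0.0, 3.0)]))
```

A normal aligned with one basis vector picks up only that direction's lightmap, which is what lets baked lighting respond to normal-map detail.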

That’s all I am remembering for now. There are certainly other reasons too.
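The idea of lightmaps that add to each other rests on light transport being linear in the emitted light, so light entering through an aperture can be baked into its own layer and summed at runtime. Here is a minimal sketch. It assumes one extra layer per aperture (a bake with that aperture fully open, minus the base bake) and a hypothetical `openness` factor in [0, 1]. Scaling by openness is an approximation: a half-open door does not pass exactly half the light, and independent per-aperture layers miss light that passes through two apertures in sequence. `combine_lightmaps` is an illustrative name, not the engine's API.

```python
from typing import List, Sequence

def combine_lightmaps(
    base: Sequence[float],
    layers: Sequence[Sequence[float]],
    openness: Sequence[float],
) -> List[float]:
    """Return base + sum_i openness[i] * layers[i], texel by texel."""
    if len(layers) != len(openness):
        raise ValueError("need one openness value per layer")
    out = list(base)
    for layer, t in zip(layers, openness):
        if len(layer) != len(out):
            raise ValueError("layer size does not match base lightmap")
        for i, value in enumerate(layer):
            out[i] += t * value
    return out

if __name__ == "__main__":
    # Two texels, two apertures: one half open, one a quarter open.
    print(combine_lightmaps([1.0, 1.0], [[2.0, 0.0], [0.0, 4.0]], [0.5, 0.25]))
```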

Very nice. I’m assuming you’re aiming for 3 bounces for the shipping version? It usually seems to be the sweet spot.

I actually don’t know how many bounces we’ll do. It might even vary from place to place. We’ll see!

@nine: This algorithm will produce soft shadows for local lights (spotlights and point lights), but those scenes are lit by sunlight, which is directional and doesn’t produce penumbrae. The dithering on the hard shadow edge is part of the visual style, I would assume.

It rather seems to be an artifact of unfiltered PCF-style shadows.
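The shadow-filtering point in that last comment can be made concrete. Percentage-closer filtering (PCF) softens a shadow-map edge by averaging several depth comparisons around the lookup instead of doing just one. With a kernel radius of zero it becomes a single binary test, which produces a hard, aliased edge. Here is a minimal sketch on a plain 2D depth array, with `pcf`, `radius`, and `bias` as illustrative parameters. Real implementations run on the GPU and usually rely on hardware comparison sampling.

```python
from typing import List

def pcf(shadow_map: List[List[float]], x: int, y: int, depth: float,
        radius: int = 1, bias: float = 0.0) -> float:
    """Fraction (0..1) of shadow-map texels around (x, y) that leave the point lit.

    radius=0 is a single unfiltered comparison; larger radii average a
    (2*radius+1)^2 neighborhood, clamping lookups at the map borders.
    """
    h, w = len(shadow_map), len(shadow_map[0])
    lit = 0
    total = 0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            sx = min(max(x + dx, 0), w - 1)
            sy = min(max(y + dy, 0), h - 1)
            # Lit if the point is no farther from the light than the stored occluder.
            if depth - bias <= shadow_map[sy][sx]:
                lit += 1
            total += 1
    return lit / total

if __name__ == "__main__":
    # Left column holds a nearer occluder; sample a point next to it.
    sm = [[0.2, 1.0, 1.0] for _ in range(3)]
    print(pcf(sm, 1, 1, 0.5, radius=0), pcf(sm, 1, 1, 0.5, radius=1))
```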