A little problem that we had when we started to create trees and vegetation is that the standard mipmap generation algorithms produced surprisingly bad results on alpha-tested textures. As the trees moved farther away from the camera, the leaves faded out, becoming almost transparent.

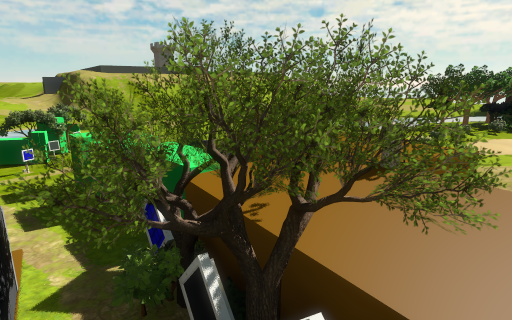

Here's an example. The following tree looked OK close to the camera:

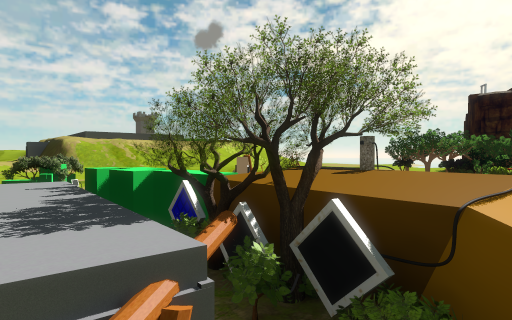

but as we moved away, the leaves started to fade and thin out:

until they almost disappeared:

I had never encountered this problem before, nor had I heard much about it, but after a few Google searches I found out that artists are often frustrated by it and usually work around the issue using various hacks. These are some of the solutions that are usually suggested:

- Manually adjusting contrast and sharpening the alpha channel of each mipmap in Photoshop.

- Scaling the alpha in the shader based on distance or on an LOD factor estimated using texture gradients.

- Limiting the number of mipmaps, so that the lowest ones aren't used by the game.

These solutions may work around the problem in one way or another, but none of them is entirely correct, and some of them add significant overhead.

To address the problem, it's important to understand why the geometry fades out in the distance. When alpha mipmaps are computed with the standard algorithms, opaque texels are averaged with their transparent neighbors, so thin features end up with intermediate alpha values that can fall below the alpha reference value. As a result, each mipmap has a different alpha test coverage: the proportion of texels that pass the alpha test changes, in most cases going down, which causes the texture to become more transparent.
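To make this concrete, here is a minimal C++ sketch (our own illustration, not NVTT code) that box-filters a tiny 4x4 alpha mask made of one-texel-wide opaque stripes, a stand-in for thin leaves. The helper names `alphaTestCoverage` and `boxDownsample` are hypothetical, and the test `a > alphaRef` matches the coverage definition used in this post.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Fraction of texels whose alpha passes the alpha test (a > alphaRef).
float alphaTestCoverage(const std::vector<float>& alpha, float alphaRef)
{
    assert(!alpha.empty());
    std::size_t passed = 0;
    for (float a : alpha)
        if (a > alphaRef)
            ++passed;
    return static_cast<float>(passed) / static_cast<float>(alpha.size());
}

// 2x2 box filter: each output texel is the average of its four parent texels.
// Assumes a square, row-major image whose side `size` is even.
std::vector<float> boxDownsample(const std::vector<float>& alpha, int size)
{
    assert(size >= 2 && size % 2 == 0);
    assert(alpha.size() == static_cast<std::size_t>(size) * static_cast<std::size_t>(size));
    const int half = size / 2;
    std::vector<float> out(static_cast<std::size_t>(half) * static_cast<std::size_t>(half));
    for (int y = 0; y < half; ++y) {
        for (int x = 0; x < half; ++x) {
            const float* row0 = &alpha[static_cast<std::size_t>(2 * y * size + 2 * x)];
            const float* row1 = row0 + size;  // same column, next row
            out[static_cast<std::size_t>(y * half + x)] =
                0.25f * (row0[0] + row0[1] + row1[0] + row1[1]);
        }
    }
    return out;
}
```

With `alphaRef = 0.5`, the stripes cover half of the 4x4 level, but every texel of the 2x2 mipmap averages to exactly 0.5 and fails the test, so the coverage drops from 0.5 to 0.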

A simple solution to the problem is to find a scale factor that preserves the original alpha test coverage as best as possible. We define the coverage of the first mipmap as follows:

coverage = Sum(a_i > A_r) / N

where A_r is the alpha reference value used by the alpha test in your application, a_i are the alpha values of the mipmap, and N is the number of texels in the mipmap. Then, for each subsequent mipmap, you want to find a scale factor that keeps the resulting coverage the same:

Sum(scale * a_i > A_r) / N = coverage

However, finding this scale directly is tricky: the problem is discrete, in general there's no exact solution, and the range of scale is unbounded. Instead, you can find a new alpha reference value a_r that produces the desired coverage:

Sum(a_i > a_r) / N = coverage

This is much easier to solve because a_r is bounded between 0 and 1, so a simple bisection search can be used to find the best solution. Once you know a_r, the scale is simply:

scale = A_r / a_r
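The search above can be sketched in a few lines of C++. This is a hypothetical, self-contained illustration of the idea, not the NVTT source: `findAlphaRef` bisects on a_r, relying on coverage never increasing as the reference grows, and keeps the candidate whose coverage is closest to the target. The 16 iterations and the clamp to 1 after scaling are our own choices.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Fraction of texels whose alpha passes the alpha test (a > alphaRef).
float alphaTestCoverage(const std::vector<float>& alpha, float alphaRef)
{
    assert(!alpha.empty());
    std::size_t passed = 0;
    for (float a : alpha)
        if (a > alphaRef)
            ++passed;
    return static_cast<float>(passed) / static_cast<float>(alpha.size());
}

// Bisection for the reference a_r in (0, 1) whose coverage is closest to desiredCoverage.
// Coverage can only go down as the reference goes up, so the search is monotonic.
float findAlphaRef(const std::vector<float>& alpha, float desiredCoverage, int iterations = 16)
{
    float lo = 0.0f, hi = 1.0f;
    float best = 0.5f, bestError = 2.0f;
    for (int i = 0; i < iterations; ++i) {
        const float mid = 0.5f * (lo + hi);
        const float c = alphaTestCoverage(alpha, mid);
        const float error = std::fabs(c - desiredCoverage);
        if (error < bestError) {
            bestError = error;
            best = mid;
        }
        if (c < desiredCoverage)
            hi = mid;  // too few texels pass: lower the reference
        else if (c > desiredCoverage)
            lo = mid;  // too many texels pass: raise the reference
        else
            break;     // exact match
    }
    return best;
}

// Rescale alpha so that testing against alphaRef gives (about) desiredCoverage.
// scale = A_r / a_r, so that, up to rounding, scale * a_i > A_r exactly when a_i > a_r.
void scaleAlphaToCoverage(std::vector<float>& alpha, float desiredCoverage, float alphaRef)
{
    const float a_r = findAlphaRef(alpha, desiredCoverage);  // a bisection midpoint, always > 0
    const float scale = alphaRef / a_r;
    for (float& a : alpha)
        a = std::min(1.0f, a * scale);  // clamp to 1; does not change the test result when alphaRef < 1
}
```

In the test data below, the filtered level has only a quarter of its texels above 0.5 while the target is half; the search settles on a_r = 0.375, and scaling by 0.5 / 0.375 pushes the second texel over the threshold.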

An implementation of this algorithm is publicly available in NVTT. The relevant code can be found in the following methods of the FloatImage class:

```cpp
float FloatImage::alphaTestCoverage(float alphaRef, int alphaChannel) const;
void FloatImage::scaleAlphaToCoverage(float desiredCoverage, float alphaRef, int alphaChannel);
```

And here's a simple example of how this feature can be used through NVTT's public API:

```cpp
// A_r is the alpha reference value used by your alpha test.

// Output the first mipmap.
context.compress(image, compressionOptions, outputOptions);

// Estimate the original alpha test coverage.
const float coverage = image.alphaTestCoverage(A_r);

// Build mipmaps and scale alpha to preserve the original coverage.
while (image.buildNextMipmap(nvtt::MipmapFilter_Kaiser))
{
    image.scaleAlphaToCoverage(coverage, A_r);
    context.compress(image, compressionOptions, outputOptions);
}
```
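For readers without the NVTT sources at hand, here is a self-contained C++ sketch of the same loop. It is our own hypothetical code, not the NVTT API: a plain 2x2 box filter stands in for `nvtt::MipmapFilter_Kaiser`, the `AlphaLevel` struct replaces the NVTT image type, and, as in the loop above, each level is built from the previous, already-scaled level.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// One square mip level of alpha values, stored row-major.
struct AlphaLevel {
    int size;
    std::vector<float> alpha;
};

// Fraction of texels that pass the alpha test (a > ref).
float coverage(const std::vector<float>& alpha, float ref)
{
    std::size_t passed = 0;
    for (float a : alpha)
        if (a > ref)
            ++passed;
    return static_cast<float>(passed) / static_cast<float>(alpha.size());
}

// 2x2 box filter (a stand-in for a better filter such as Kaiser).
AlphaLevel boxDownsample(const AlphaLevel& src)
{
    const int s = src.size, h = s / 2;
    AlphaLevel dst{h, std::vector<float>(static_cast<std::size_t>(h) * static_cast<std::size_t>(h))};
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < h; ++x) {
            const float* r0 = &src.alpha[static_cast<std::size_t>(2 * y * s + 2 * x)];
            const float* r1 = r0 + s;  // same column, next row
            dst.alpha[static_cast<std::size_t>(y * h + x)] = 0.25f * (r0[0] + r0[1] + r1[0] + r1[1]);
        }
    return dst;
}

// Bisect for the reference a_r whose coverage is closest to target, then scale by ref / a_r.
void scaleToCoverage(std::vector<float>& alpha, float target, float ref)
{
    float lo = 0.0f, hi = 1.0f, best = ref, bestErr = 2.0f;
    for (int i = 0; i < 16; ++i) {
        const float mid = 0.5f * (lo + hi);
        const float c = coverage(alpha, mid);
        const float err = std::fabs(c - target);
        if (err < bestErr) { bestErr = err; best = mid; }
        if (c < target) hi = mid;
        else if (c > target) lo = mid;
        else break;
    }
    const float scale = ref / best;
    for (float& a : alpha)
        a = std::min(1.0f, a * scale);
}

// Builds the chain down to 1x1, preserving the top level's alpha test coverage.
std::vector<AlphaLevel> buildCoveragePreservingMips(const AlphaLevel& top, float alphaRef)
{
    assert(top.size > 0 && (top.size & (top.size - 1)) == 0);  // power-of-two side
    assert(top.alpha.size() == static_cast<std::size_t>(top.size) * static_cast<std::size_t>(top.size));
    const float target = coverage(top.alpha, alphaRef);
    std::vector<AlphaLevel> chain{top};
    while (chain.back().size > 1) {
        AlphaLevel next = boxDownsample(chain.back());
        scaleToCoverage(next.alpha, target, alphaRef);
        chain.push_back(std::move(next));
    }
    return chain;
}
```

On the 4x4 mask in the test below (coverage 0.4375), plain box filtering drops the 2x2 level to a coverage of 0.25, while the coverage-preserving chain keeps it at 0.5, the closest value a 2x2 level can represent.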

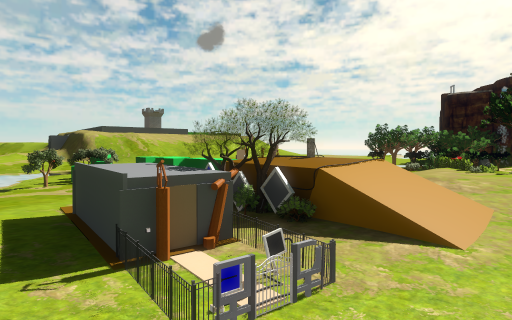

As seen in the following screenshot, this solves the problem entirely:

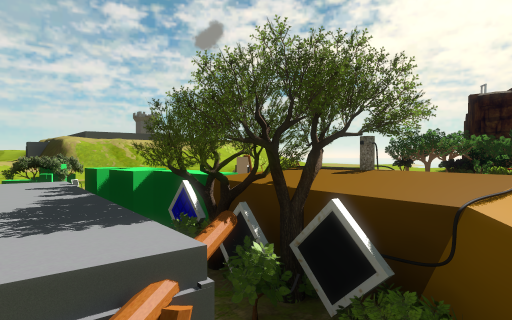

even when the trees are far away from the camera:

Note that this problem shows up not only with alpha testing, but also with alpha to coverage (as in these screenshots) and with alpha blending in general. In those cases there is no specific alpha reference value, but the algorithm still works well if you choose a value close to 1, since what you essentially want is to preserve the percentage of texels that are nearly opaque.

You lost me about halfway through that post… but the screenshots are very nice indeed. I think it’s fair to leave the programming to others, but as long as you keep dropping pretty pictures, I’m gonna keep reading.

why is there one small grey scud/cumulus cloud in the sky?

Useful algorithm, thanks for sharing.

Have you considered distance fields? http://www.valvesoftware.com/publications/2007/SIGGRAPH2007_AlphaTestedMagnification.pdf

A friend of mine was playing with them and the results were remarkable. They’re great for magnification, but they’d also maintain a remarkable amount of detail as you reduce the mip level.

The other thing to use is anisotropic filtering. I found this made a huge difference to alpha-tested transparencies when viewed from a shallow angle.

Looks quite nice.

However, a simple and correct solution when using alpha blending is to use premultiplied alpha. Linear interpolation works fine on premultiplied values, thus generating mipmaps using a box filter is ok. When using alpha to coverage you could un-premultiply the color values (i.e. divide by alpha) in the shader to get the desired result.
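A minimal C++ sketch of this suggestion, under our own assumptions (RGBA floats in [0, 1], a 2x2 box filter, and transparent black returned for fully transparent texels when un-premultiplying): color is averaged in premultiplied form, so the invisible color of transparent texels does not bleed into the result. Note that the alpha channel itself is box-filtered exactly as it would be without premultiplication.

```cpp
#include <cassert>

struct RGBA {
    float r, g, b, a;
};

// Convert straight alpha to premultiplied alpha.
RGBA premultiply(const RGBA& c)
{
    return {c.r * c.a, c.g * c.a, c.b * c.a, c.a};
}

// Recover straight color (what a shader would do before alpha to coverage).
// Fully transparent texels carry no color information, so return transparent black.
RGBA unpremultiply(const RGBA& c)
{
    if (c.a <= 0.0f)
        return {0.0f, 0.0f, 0.0f, 0.0f};
    return {c.r / c.a, c.g / c.a, c.b / c.a, c.a};
}

// 2x2 box filter on premultiplied texels: a plain average of every channel.
RGBA boxFilterPremultiplied(const RGBA& t0, const RGBA& t1, const RGBA& t2, const RGBA& t3)
{
    return {0.25f * (t0.r + t1.r + t2.r + t3.r),
            0.25f * (t0.g + t1.g + t2.g + t3.g),
            0.25f * (t0.b + t1.b + t2.b + t3.b),
            0.25f * (t0.a + t1.a + t2.a + t3.a)};
}
```

For one opaque green texel next to three transparent texels whose stray color is magenta, this gives pure green with alpha 0.25, whereas a straight average of the same four texels would give a mostly magenta color of (0.75, 0.25, 0.75).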

this is really useful information, I’ve always hated this problem. if only I had the programming skills to actually open up the hood and change this kind of thing in the engine I’m using.

more api developers need to read this blog, you guys have graciously shared a lot of solutions to common (and apparently avoidable) problems.

Reminds me of this:

http://www.humus.name/index.php?page=3D&ID=61

@Won As I note at the end of the post, this technique works with alpha to coverage, which is in fact what we use in the game when multisampling is available.

@Georg Premultiplied alpha does not really solve this problem; it does not prevent the alpha test coverage from changing. However, you can still use this technique with premultiplied alpha; you just have to premultiply the colors after the mipmap generation.

@Ben Distance fields could solve the problem, but they are more expensive to evaluate at runtime, while this technique has no extra cost. Something that might be interesting to investigate is generating the mipmaps using distance fields; I believe that would preserve the original coverage better.

Actually, distance fields don’t have to be any more expensive at runtime, as they’re only a different way to encode the data itself. They can be alpha tested just like normal alpha. The only difference is that you will usually want to set the default clip threshold to 0.5. I have a simple shader that can convert any hard-edged mask into a signed distance field with the edge at 0.5. This doesn’t completely solve the coverage problem, but it does seem to look nicer. And one added benefit is that animated frames can also morph edges a bit, up to the gradient width.
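The commenter’s converter is a shader; as a hedged, CPU-side illustration only, this brute-force C++ sketch turns a hard-edged mask into a signed distance field with the edge at 0.5. The `spread` parameter and the linear mapping are our own assumptions, and a real tool would use a faster distance transform.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Brute-force signed distance field for a small binary mask (row-major, w x h).
// Output is 0.5 on the edge, above 0.5 inside and below 0.5 outside, mapped linearly
// over `spread` texels and clamped to [0, 1]. O((w*h)^2): for illustration only.
std::vector<float> maskToDistanceField(const std::vector<bool>& inside, int w, int h, float spread)
{
    assert(w > 0 && h > 0 && spread > 0.0f);
    assert(inside.size() == static_cast<std::size_t>(w) * static_cast<std::size_t>(h));
    std::vector<float> out(inside.size());
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            const bool in = inside[static_cast<std::size_t>(y * w + x)];
            float nearest = std::numeric_limits<float>::max();
            for (int v = 0; v < h; ++v)
                for (int u = 0; u < w; ++u)
                    if (inside[static_cast<std::size_t>(v * w + u)] != in) {
                        const float dx = static_cast<float>(u - x);
                        const float dy = static_cast<float>(v - y);
                        nearest = std::min(nearest, std::sqrt(dx * dx + dy * dy));
                    }
            // Place the edge halfway between this texel center and the nearest opposite one.
            const float d = (nearest == std::numeric_limits<float>::max()) ? spread : nearest - 0.5f;
            const float signedDist = in ? d : -d;
            out[static_cast<std::size_t>(y * w + x)] =
                std::min(1.0f, std::max(0.0f, 0.5f + 0.5f * signedDist / spread));
        }
    }
    return out;
}
```

Thresholding this output at 0.5 gives back exactly the input mask, which is what allows it to be alpha tested like ordinary alpha.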

Yeah. The Humus article talks about contrast-boosting to maintain edges for alpha textures, but for the easier case of magnification rather than minification.

@Won Ah, I overlooked that. It seems that’s a good solution to keep edges sharp after magnification, but yeah, that’s an entirely different problem.

I don’t understand why this is a problem for regular alpha blending.

It depends on what you want to achieve. In the case of vegetation you have some areas of the texture that are fully opaque, others that are fully transparent, and short, smooth transitions between them. As the texture is minimized, you don’t want the small/thin features to become more transparent. A tree isn’t transparent no matter how far away it is! The results are much better if the small features either disappear entirely or cluster together and remain solid while still having smooth edges around them.

That’s what the proposed algorithm achieves. However, you can obtain similar results in many different ways. For example, you could apply a fractional dilation or erosion filter until you achieve the desired coverage, or, as mentioned before, use distance fields to compute the mipmaps and then transform them into an alpha mask by finding the isoline that best preserves the coverage.

The high concept makes sense in the abstract, sure, but it doesn’t seem very convincing. Yes, a tree should stay opaque and if you make all the leaves 50% transparent it won’t stay 100% opaque. But making the leaves go away entirely has got to be worse; at least the mipmapped alpha blended tree is 90-95% opaque, instead of 0% opaque.

And at least the mipmapped alpha represents coverage and produces something resembling anti-aliasing, whereas without alpha-to-coverage and a decent MSAA your alpha-tested foliage looks like crap. So I’d argue that while alpha-blending has some defects in full opacity, they’re far less objectionable than any other defect on typical hardware in non-Siggraph contexts.

I mean, the proof is in the pudding. If it looks worse, it looks worse. But I can’t tell if you actually tried this or were just tossing off a guestimate-y aside.

I think the results speak for themselves:

With alpha coverage adjustment:

http://img251.imageshack.us/img251/1540/blendcoverage.png

Without:

http://img227.imageshack.us/img227/1391/blendnocoverage.png

@Ignacio I did not mean to imply that premultiplied alpha would solve the problem for alpha test (it only does if your alpha threshold is 0.5). However, as I mentioned premultiplied works fine for both alpha blending and alpha to coverage. It simply is the correct way of linearly filtering textures with alpha.

The only flaw I see there is the shadows, which are another alpha-tested case, and I do agree about the improvement for the alpha test case. The farther away stuff looks different, but it’s not clear to me which one is more right or wrong; this doesn’t seem to be testing the case I thought we were talking about, looking through a tree or other multiple layers of foliage that ought to be opaque.

Also relevant is the approach to foliage in Pure: http://www2.disney.co.uk/cms_res/blackrockstudio/pdf/Foliage_Rendering_in_Pure.pdf

although they don’t talk about shadows at all. They don’t do alpha testing or regular blending; they do some hacky blending that weights between adding up all the alpha (which will go opaque with multiple non-opaque layers) and taking the max of all the alpha (which is only opaque if some alpha is opaque). The whole thing is super hacky, but then I’ve yet to see good-looking foliage that didn’t smack of a hack.

@Sean it’s not trivial to test this on trees since in our case, they have many self intersections and don’t look good when rendered with alpha blending. What I was trying to show is that without coverage preservation the leaves become almost transparent when minimized, while with coverage preservation they stay significantly more opaque. I don’t think that adding multiple layers of foliage would make that any different.

The shadows are a separate issue, but that’s not what I was trying to show…

I’m moderately satisfied with how foliage looks on PC, but on consoles it’s hard to afford MSAA, so it might make sense to use hacks like that. It does seem to look quite good, but I’ll have to try the game to convince myself.

Thanks for sharing, this is looking really good. Do you know if those trees are being generated by something like SpeedTree or just custom modeling? That process might be an interesting blog post.

“The high concept makes sense in the abstract, sure, but it doesn’t seem very convincing. Yes, a tree should stay opaque and if you make all the leaves 50% transparent it won’t stay 100% opaque. But making the leaves go away entirely has got to be worse; at least the mipmapped alpha blended tree is 90-95% opaque, instead of 0% opaque.”

A technique I’ve used in the past is to simply randomly choose one of the 4 parent pixels instead of blending them together. Even *that* horrible hack often looks better than regular mip-mapped alpha blending on things like leaves and chain link fences, etc. (in fact lots of artists use this hack by disabling mip-mapping on alpha-tested textures). But IC’s method is surely better than those.
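A sketch of that hack in C++, under our own assumptions (square row-major alpha image with an even side, `std::mt19937` as the random source): each output texel copies one randomly chosen parent texel instead of averaging the four, so a hard-edged 0/1 mask stays hard-edged at every level. Always picking the same parent gives the deterministic point-sampling variant.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Downsample by copying one of the four parent texels, chosen at random, into each child.
std::vector<float> randomPickDownsample(const std::vector<float>& src, int size, std::mt19937& rng)
{
    assert(size >= 2 && size % 2 == 0);
    assert(src.size() == static_cast<std::size_t>(size) * static_cast<std::size_t>(size));
    const int half = size / 2;
    std::uniform_int_distribution<int> pickParent(0, 3);
    std::vector<float> dst(static_cast<std::size_t>(half) * static_cast<std::size_t>(half));
    for (int y = 0; y < half; ++y) {
        for (int x = 0; x < half; ++x) {
            const int k = pickParent(rng);    // 0..3 selects one texel of the 2x2 parent block
            const int sx = 2 * x + (k & 1);   // low bit: left or right column
            const int sy = 2 * y + (k >> 1);  // high bit: top or bottom row
            dst[static_cast<std::size_t>(y * half + x)] = src[static_cast<std::size_t>(sy * size + sx)];
        }
    }
    return dst;
}
```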

ps. premultiplied alpha. lol.

Like I said, they look more transparent, but I don’t know what’s actually right. E.g. we’d really need a 256-sample SSAA that we downsample and compare against to be sure — and no, I’m not saying you should go do that work, I’m just saying it’s one thing to say one or the other is ‘aesthetically superior’ in your context, and another to say it’s actually ‘more right’.

If you can send me your tree model I’d be curious to play with it a little.

Well, wait, no. Because it doesn’t matter what the results of 256-sample SSAA would be, because we are not trying to duplicate the results of an abstract high-res rendering process; we are trying to use 3D rendering to generate a specific effect.

That effect is trees that don’t look like cellophane when you are far away from them — as they do not in the real world. This does that. Therefore, it’s “right”.

@Sean Barrett: can you somehow blackmail Casey and Jeff into doing more podcasts? I hear tell that Casey’s got quite the pastry fetish, and if that news were made public, well, he could lose some prestige.

I’m wondering, what’s the poly count on the trees?

Jon, your trees in the game don’t look anything like this screenshot I’m discussing, and you’re not currently doing alpha blended trees in your game, so I don’t think your comment has much to do with what I’m talking about in context.

In general (though not always) we want rendering to approximate the same effect that would obtain in the real world. Now it is true that these are trees which are supposed to be simulacra of such things as they exist in the real world, and to the degree that they are inaccurate from real trees you might nevertheless want them to have certain properties as you got further away from them that wouldn’t be accurate from a standpoint of The Rendering Equation, but which would be true to the thing you had intended to simulate. (This is what I meant by “aesthetically superior in your context”). But I don’t think that’s even what’s going on here, because clearly if they are opaque up close according to the rendering equation, they are opaque far away according to the rendering equation. The problem is that alpha testing is a hack that’s very far from the results of the rendering equation, so the (naive) alpha testing results deviate as discussed.

And while I am fine with the idea that the proposed solution is aesthetically superior in your context — rock on! — Ignacio originally expressed the issue as “Note that this problem does not only show up when using alpha testing, but also when using alpha to coverage (as in these screenshots) or alpha blending in general”, implying that it is perhaps something of interest outside the context of rendering the Witness. Thus we were in fact talking about alpha-blended trees, and this generality, not the Witness in particular, so this is further part of why I am doubtful of your reply.

So, sticking to correctness: if the original art was actually opaque, and then you get farther away from it, it should look the same as when you were close to it but downsampled; that downsampling will preserve opacity wherever it was opaque in a large enough region. High-pixel-count SSAA is equivalent to downsampling the “closer” image. Thus such a test would be both mathematically correct *and* address the opacity issue with which he was concerned.

Anyway, given that the opacity-preservation would be preserved by downsampling the original composited image (this is just “blend does not distribute over lerp” again), I stand by my claim: I can’t tell in Ignacio’s example for alpha blending which of the two images actually reproduces the visibility effect you would see at that distance; I’d need to see a “correct” reference to tell that. But that wouldn’t even necessarily address the actual question; since the actual problematic effect is one of an interaction of multiple layers (with alpha blending an alpha value representing coverage, normal mipmapping clearly does do the right thing for single layers), and the example he tested doesn’t seem to demonstrate multiple overlapping layers with some significant depth complexity, I don’t even know what’s right. I just see one lighter than the other but neither looks more right. And I can’t necessarily tell whether that amount of lightness is appropriate algorithmically; e.g. are two layers of 90% opacity really visibly non-opaque? Three? And what are the actual numbers here?
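For reference, the arithmetic behind that question, assuming n identical layers of opacity α composited with the standard over operator (our assumption; real foliage layers are neither identical nor perfectly sorted):

$$\alpha_{\text{total}} = 1 - (1 - \alpha)^n, \qquad \alpha = 0.9:\quad n = 2 \Rightarrow 0.99, \quad n = 3 \Rightarrow 0.999$$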

Which is why, you know, it would be a lot simpler if I could just play with the tree model and figure it out for myself.

“Jon, your trees in the game don’t look anything like this screenshot I’m discussing, and you’re not currently doing alpha blended trees in your game, so I don’t think your comment has much to do with what I’m talking about in context.”

I think this is the problem… Everyone else is talking in context to the game, you are talking about something else entirely.

Alpha map mipmaps: after a certain level, there are significantly fewer 100% opaque pixels, because they are averaged with the neighbouring 0% pixels. So after a while you end up with a mipmap that is mostly 50%. That shouldn’t happen; Ignacio found a pretty cool solution, and now the leaves don’t become semi-transparent when you move away.

What about jumping to a complete procedural engine?

Hi Ignacio, great article. Can I ask what version of NVTT the algorithm implementations can be found in? I’ve gone back as far as Dec 2008 but can’t find them in the FloatImage.cpp or .h. I’d be keen to check out the routines you mention to see if they were viable for my current project. I’ve encountered this problem before but I overcame it by using nearest point sampling during the downsampling for the mipmap generation. It’s fast but obviously a solution that accepts linear filtering would look a lot nicer. Cheers, Al.

Alan, the implementation is only available in trunk; I still haven’t gotten around to packaging a new official release. You can browse its code here:

http://code.google.com/p/nvidia-texture-tools/source/browse/trunk/

The FloatImage class is defined in src/nvimage/FloatImage.cpp, here are the links to the specific functions referenced above:

http://code.google.com/p/nvidia-texture-tools/source/browse/trunk/src/nvimage/FloatImage.cpp#988

http://code.google.com/p/nvidia-texture-tools/source/browse/trunk/src/nvimage/FloatImage.cpp#1005

Ignacio, thank you for sharing this, much appreciated. The solution looks excellent for creating mipmap textures offline that maintain great alpha test coverage. I’d certainly use it for foliage and other such paraphernalia. I’ve been hoping for a viable real-time solution to appear that I could use on PlayStation 3. Thanks again for releasing the code, and keep up the great work! Al.

How are you mipmapping normal maps? The solutions I’ve found online involve averaging x,y,z values and re-normalizing, but these don’t work in extreme cases (averaging (1,0,0) and (-1,0,0) gives the zero vector) and seem not to take the specific properties of normal maps into account.

Could you PLEASE compile your code example into an executable? I have tried to download the NVTT library, but some parameters are different and I don’t know how to bind an image (Surface) to load from an input file. What is “tmpImage” and how is it related to the “image” variable?

Can you show whole source?

Thank you for your time.

I’m not a programmer, but I’ve noticed that forcing alpha to coverage combined with FXAA in my NVIDIA control panel gives very smooth and stable AA for alpha-tested textures, even better than supersampling.

To be more specific, MSAA on alpha gives a dithering pattern, and running FXAA hides it. It sounds hacky, but it gives surprisingly robust results.