Ignacio has implemented the first version of the handling for light sources that change (doors that open or close, lights that can be switched on or off, etc). The idea is that we just precompute different lightmaps for each of these cases and blend between them at runtime. In the future (not the future of this game, but the general computer graphics future) when we have realtime global illumination, this would not be necessary; but for now this is much faster to compute and much easier to implement. The drawback is that we have to think about the different cases in advance (things can't be too dynamic) and the amount of lightmap storage space grows rapidly as the number of variables grows.
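As a rough illustration of the blending idea, here is a minimal CPU-side sketch in Python rather than shader code. The function name, the flat list-of-tuples layout, and the texel values are all assumptions made for the example, not the game's actual implementation.

```python
def blend_lightmaps(map_a, map_b, t):
    """Linearly interpolate two lightmaps of equal size, texel by texel.

    Each lightmap is a flat list of (r, g, b) tuples.
    t = 0 returns map_a, t = 1 returns map_b.
    """
    if len(map_a) != len(map_b):
        raise ValueError("lightmaps must have the same number of texels")
    t = min(max(t, 0.0), 1.0)  # clamp the blend factor to [0, 1]
    return [
        tuple((1.0 - t) * a + t * b for a, b in zip(texel_a, texel_b))
        for texel_a, texel_b in zip(map_a, map_b)
    ]


# Hypothetical precomputed lightmaps, one per door state (two texels each).
DOOR_CLOSED = [(0.25, 0.25, 0.25), (0.0, 0.0, 0.0)]
DOOR_OPEN = [(0.75, 0.75, 0.5), (0.5, 0.5, 0.5)]

# Halfway through the door-opening animation.
halfway = blend_lightmaps(DOOR_CLOSED, DOOR_OPEN, 0.5)
```

Switching the maps outright corresponds to using only `t = 0` or `t = 1`.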

We don't yet smoothly interpolate between the maps in the shader; we just switch the maps outright. Interpolation is coming soon. But in screenshots you can't see changing conditions anyway, so it's time for some screenshots.

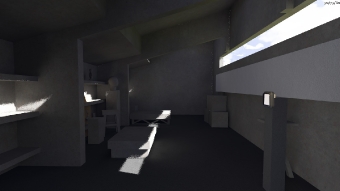

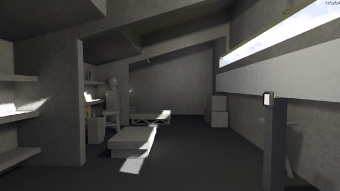

First is the house with the door closed, then with the door open. After that comes the sculpture room, with two light sources toggled in various ways (see the captions on each picture). For now you'll have to forgive the light leaking in the corners and the shadow speckles on the walls; they are due to issues with the dynamic shadow system (which is not really related to what is being shown here) and those issues have yet to be resolved!

Looks great.

Would you mind telling us a little bit more about the general concept behind The Witness? I don’t want to be spoiled, but will light play a role similar to the one time played in Braid, from a gameplay perspective?

Very interesting. As someone uninitiated in the technical side of things, I was wondering why you, too, are using that dither effect at the threshold between light and dark. Is it to smooth out the edges? I’ve seen this effect in recent games as well, like Bad Company 2 (DICE) or Final Fantasy XIII (Square Enix). Isn’t dithering a thing of the past? It looks so outdated. (Reminds me of old ink jet printers.)

I wonder if there’s a simple, as in non brute force, way to detect gradients within light-maps?

If so, you could drastically decrease the storage requirements by storing coordinates with color gradients, and only storing a block of pixels when there’s too much variety within it.

Just my 2cts.

Could you make it so that the images, when clicked on (or maybe middle-clicked) open up in a new window? It’s easier to compare images when you can flick through the tabs and only see the changes. With the overlay being slightly larger than my screen, I have to scroll down to click “next”, and that makes comparisons difficult.

These lighting ideas are pretty intelligent. I can’t wait to see them in motion.

Hey, congrats on pushing the pre-computed envelope! I’m a Source engine modder, so battling with the limitations of pre-computed lighting is one of my major headaches. You’ve effectively circumvented one major limitation, which is quite an achievement. You’ve left me wishing Valve would come up with something like this for Source. Not to take away from what you’ve done (truly, I’m impressed), but this seems to call for extra caution with regard to scene lighting complexity. The sculpture room as you show it requires 4 times as much lighting data as the same room with no “options”. Simply adding a door to get into that room brings the total to 8 times as much data. Obviously you realize the situation, as you called it out in your post, but it has me wondering how gameplay decisions might be affected by size constraints.

Another thing I’m wondering – Is global illumination really such a far off dream that the only sensible solution is to push pre-computed lighting to new lengths? I thought some games were already using it, even if in some simplified fashion. Is it perhaps just too much effort to implement with a small team?

The problem is that there is a practical limit to the resolution of the shadow maps, and it is lower than one would like. If I took out that dithering then you would see big chunky pixels. At the same time, though, the dithering causes other visual problems, and is really slow. So, in general, it’s an issue.

vecima: Because light is additive, we could refactor the system so that it uses only one lightmap per variable, instead of being 2-to-the-n. Basically you can just subtract out the lightmap with everything turned off / closed from all the other lightmaps; then at runtime, you can add in the other lightmaps as you turn on lights or open windows. The drawback then is that the shader becomes slower if you have more than 2 sources (because it is blending multiple lightmaps), but this is constrained per-location so it is kind of controllable.
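The refactoring described above can be sketched roughly as follows. This is only an illustration: it assumes lightmaps hold linear (not gamma-encoded) intensities, uses one scalar per texel for brevity, and every name and value is hypothetical.

```python
def build_deltas(base, single_source_maps):
    """Subtract the everything-off lightmap from each single-source lightmap.

    base: lightmap baked with every source off / closed.
    single_source_maps: dict mapping a source name to the lightmap baked
    with only that source on. Returns one delta lightmap per source.
    """
    return {
        name: [lit - off for lit, off in zip(lightmap, base)]
        for name, lightmap in single_source_maps.items()
    }


def compose(base, deltas, active_sources):
    """Add the delta of every active source on top of the base lightmap."""
    result = list(base)
    for name in active_sources:
        result = [r + d for r, d in zip(result, deltas[name])]
    return result


# Hypothetical two-texel lightmaps: n sources need n + 1 maps, not 2**n.
BASE = [0.125, 0.125]
BAKED = {"lamp": [0.625, 0.125], "window": [0.125, 0.375]}

DELTAS = build_deltas(BASE, BAKED)
both_on = compose(BASE, DELTAS, ["lamp", "window"])
```

The loop in `compose` is where the per-pixel cost grows with the number of active sources, which is the shader slowdown mentioned above.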

In the 2-to-the-n case, you can still control that somewhat by populating the space sparsely… if there are theoretically 8 lightmaps, maybe you only have 5 or 4, as in general some light sources are going to dominate other ones.
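One way to picture a sparsely populated space is a lookup that falls back to the closest stored combination. The sketch below is an assumption about how that could work, not a description of the game's code; the weights, file names, and the weighted-mismatch rule are all made up for illustration.

```python
def nearest_stored_state(state, stored_states, weights):
    """Pick the stored on/off combination that differs least from `state`.

    state: tuple of 0/1 flags, one per light source.
    weights: one weight per source; a mismatch on a dominant source costs more.
    """
    def mismatch_cost(candidate):
        return sum(w for s, c, w in zip(state, candidate, weights) if s != c)

    return min(stored_states, key=mismatch_cost)


# Three hypothetical sources: (window, ceiling lamp, candle).
WEIGHTS = (10.0, 3.0, 1.0)

# Only 5 of the 8 possible combinations have a baked lightmap.
STORED = {
    (0, 0, 0): "all_off.lm",
    (1, 0, 0): "window.lm",
    (0, 1, 0): "lamp.lm",
    (1, 1, 0): "window_lamp.lm",
    (0, 0, 1): "candle.lm",
}

# Everything on is not stored; the candle is dominated, so it is ignored.
chosen = nearest_stored_state((1, 1, 1), STORED, WEIGHTS)
lightmap_file = STORED[chosen]
```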

I don’t know of any games that are doing realtime high-quality global illumination. It is generally considered as beyond the current tech horizon. There are some SDKs you can license

Sander: The question is then how you are supposed to render that quickly on modern graphics hardware. I suppose you could render a mesh to an alternate render target and then blend with that at runtime… but that is substantially slower, and it is questionable whether the mesh would provide enough compression (though possibly it would be a lot, for some lighting situations.) I could see it as a space for experimentation, though.

How do you specify the range of geometry affected by an alternate lightmap?

With a lot of tiny lightmapped things around it seems tricky.

And I wonder, where do you cut it to not introduce seams, since GI is inherently not local?

(Have not tried myself, but when you open a door lighting should subtly change just everywhere).

I have somewhat similar lightmap switching. However, I do not even think of interpolating them, since I encode a dominant direction vector as well as HDR color per pixel, and it takes 64 bits per pixel spread over 3 textures. Quite heavy, so every time we need to change the lighting, something blows up to hide the change :)

Anton: Right now the range is somewhat hardcoded, based on the intersection of the bounding volumes, but we will add the option to expand it if necessary. You can currently do that by editing a text file, but that’s obviously not very convenient.

Transitions are not really correct, but since the results only affect the indirect illumination, I think they are plausible.

Something you could do is use dynamic textures and do the interpolation in a separate pass beforehand. The problem is that this doesn’t allow you to use texture compression, but since transitions are fairly fast, that should not be a major issue: you only have to use an uncompressed lightmap for a short period of time.

BTW, I would also add that what we are doing is by no means new. Quake 2 already had support for multiple lightmaps per surface. They did it at a much finer granularity. Each brush face could have up to 4 different light “styles”. QRAD generated errors if more dynamic lights influenced the same face. That allowed them to have blinking lights or the ability to turn lights on and off. In Q2 dynamic objects never cast shadows, so they didn’t have to deal with dynamic occlusions.
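The per-face scheme described above might be sketched like this. It is a simplified illustration based only on the description in this comment, not Quake 2's actual code or data layout; the class, method names, and values are hypothetical, and lightmaps are reduced to one scalar per texel.

```python
MAX_STYLES_PER_FACE = 4  # the per-face limit quoted in the comment above


class Face:
    """A surface that carries one lightmap per light style affecting it."""

    def __init__(self):
        self.styles = []  # list of (style_id, lightmap) pairs

    def add_style(self, style_id, lightmap):
        # Mirrors the bake-time error: too many styles on one face.
        if len(self.styles) >= MAX_STYLES_PER_FACE:
            raise ValueError("too many light styles on one face")
        self.styles.append((style_id, lightmap))

    def shade(self, style_values):
        """Sum each style's lightmap scaled by that style's current intensity."""
        texel_count = len(self.styles[0][1]) if self.styles else 0
        result = [0.0] * texel_count
        for style_id, lightmap in self.styles:
            scale = style_values.get(style_id, 0.0)  # unknown styles count as off
            result = [r + scale * texel for r, texel in zip(result, lightmap)]
        return result


face = Face()
face.add_style(0, [0.5, 0.25])   # hypothetical always-on light
face.add_style(1, [0.25, 0.5])   # hypothetical flickering light
lit = face.shade({0: 1.0, 1: 0.5})  # flickering light at half intensity
```

Turning a light off is then just a matter of setting its style value to zero, with no change to the stored lightmaps.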

Luiz: I am sticking to mostly tech updates for now. We’ll talk about the gameplay a little later!

Have you seen what Geomerics is doing? They have a system that provides real time quasi-GI. From what I can figure out, they’re doing something like this:

During scene creation and pre-computation:

1) Take a static scene as input, split it into patches a-la radiosity

2) Determine the contribution of reflected light from each patch to the incident light on every other patch. Just the data needed to do a radiosity bounce, no actual light has entered the scene yet.

In the real-time engine:

3) Add your dynamic lighting

4) Sample the dynamic lighting at the location of each patch sample

5) Do a radiosity bounce on the patches (Should be very fast since it’s all precomputed!)

It’s also storing “light probe” samples which are used to light any dynamic objects in the scene.
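Steps 2 to 5 above amount to multiplying a precomputed transfer matrix by the direct lighting sampled each frame. The sketch below illustrates that reading of the comment only; it is not Geomerics's actual algorithm, and the transfer values, patch count, and names are invented for the example.

```python
def one_bounce(transfer, direct):
    """Apply one radiosity bounce using a precomputed transfer matrix.

    transfer[i][j]: fraction of the light leaving patch j that is reflected
    off patch i (form factor and surface albedo folded together).
    direct[j]: direct lighting sampled on patch j this frame.
    """
    return [sum(t * d for t, d in zip(row, direct)) for row in transfer]


# Precomputed offline (steps 1 and 2): three hypothetical patches.
TRANSFER = [
    [0.0, 0.25, 0.25],
    [0.25, 0.0, 0.5],
    [0.25, 0.5, 0.0],
]

# Runtime (steps 3 and 4): a dynamic light currently hits only patch 0.
direct = [1.0, 0.0, 0.0]

# Step 5: the bounce is one matrix-vector product.
indirect = one_bounce(TRANSFER, direct)
total = [d + i for d, i in zip(direct, indirect)]
```

Further bounces could be approximated by feeding `indirect` back through `one_bounce`, at the cost of another product per bounce.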

Yes, I considered licensing their stuff. However, ultimately it is overkill for what this game is doing (not much in the way of moving light sources) and is more expensive in terms of shader / texture resources.

If you really want to do live GI though it seems well worth looking into. Maybe I am biased because I am a Geometric Algebra fan.

Hey Jonathan- loved your last game and was excited to see something about your new project. I was just wondering when you are going to talk more about the concept of the game and gameplay. Looks great so far!

I don’t know if I will talk about the gameplay before the game is released.

Blogging about technical stuff actually serves a purpose (in terms of supporting the dialog, circulating ideas among the community of people working on this stuff). What good does talking about the gameplay do? How does it do anything but spoil the game for people who might play it later?

Hi Jonathan, I’m from Brazil, and first of all I want to congratulate you on your game Braid. I loved the gameplay, soundtrack and the puzzles. I’m a developer as well and I’m starting out in the game business. I want to ask you something. Your games are all designed using Direct3D, right?

Would you advise people to use Direct3D instead of OpenGL? Why? OpenGL supports other platforms, such as Linux, and it’s free. Isn’t that a plus by itself? I know OpenGL used to be better than Direct3D, but I don’t know where they stand today. Do you think that Direct3D would be easier to develop with? Or maybe faster?

Congratulations on your success, looking forward to seeing some other games and posts.

The most important part is to make a good game. All this other stuff like D3D / OpenGL is just distraction. It doesn’t matter very much, because you can change those decisions later on pretty easily.

To say these games are “designed using Direct3D” is an extremely wrong phrase. The design of the game itself has very, very little to do with what graphics API is involved. On the tech side, there is a little bit more in the way of decisions made in relation to the specific API, but even there, it is a vast minority.

Just wanted to chime in that I’m also a fan of Geometric Algebra. Representation is half the problem (usually the harder half!).

Hey again Jonathan.

Yeah, I see what ya mean. That’s a really interesting approach and makes sense… all the better when the game is eventually released!

So I have a random question: What games influence your concept/design and what games would you recommend playing, be they indie or studio made? I’m not a developer or anything, I just enjoy playing and appreciating well-made games and was interested in what you think!

Hi Jonathan,

Looking good!

I’ve been working on a GPU lightmap renderer for a while now so I thought I’d post. I started using a hemicube method similar to yours but recently I’ve moved to a depth peel method which I’ve found to be much faster. It was tough getting it up and running but well worth it. There’s more info on my site and the blog has the most recent stuff.

https://sites.google.com/site/copypastepixel/lighttool

and

https://sites.google.com/site/copypastepixel/blog

Interesting stuff Stefan! I suppose your implementation is based on the algorithm described in the GPU Gems 2 article, is that correct? I recently learned that the Ubisoft guys are using that algorithm as well (see their GDC’10 Splinter Cell presentation). Do you know of any other resources about this method?

The main reason I went with the more traditional hemicube approach is that it seemed much more straightforward to use a fairly standard method, and it required very few changes to the engine.

Also, I wasn’t sure it would be possible to obtain production quality results with the depth peeling method, but it looks like you and others had success with it, so maybe my fears were unfounded.

I suspect the depth peeling method is a bit faster than ours, so I might experiment with that at a later point.

I just want to make sure I understand the depth peeling approach… if you’re computing the results for N sample locations and D sample directions, hemicube sampling requires rendering N times and computing D pixels for each (and in practice the hemicube rendering requires 5 scene renderings, or 4 if you use a hemi-octahedron).

In depth peeling, you’re computing the results for D sample directions at N sample locations by rendering D scenes, each with K pixels. Each scene rendering requires depth peeling which requires re-rendering the scene L times if the scene has depth complexity of L.

Does K need to be on the same order as N? Naively, looking at only one direction, it seems like it would have to be, or else you’re going to get worse results. Indeed, it seems like it has to be larger than N to account for the approximating nature (say, 1.5N or 2N). However, that’s ignoring the depth peeling; you need N sample locations total but some of them will appear at different depths in the same pixel. So maybe you really only need K = N/L or so? Maybe K = 2N/L to compensate for the spatial approximation. But again, this is still only thinking about one direction. Maybe the sloppiness and inaccuracy of the “shadow map”iness doesn’t matter as much as you start averaging over multiple directions, because adjacent sample locations will map differently in the different directions and the artifacts will average out. So maybe K = N/L or even lower?

So, if we assume K=N/L, the math above is:

hemicube: 4N scenes, N*D pixels

depth peeling: L*D scenes, L*D*K pixels = N*D pixels

If pixel shading were dominant, then by this analysis the difference wouldn’t be that large. It’s a trivial pixel shader, so I suppose performance is more tied to vertex processing and setup, though?

The hemicube renderer can frustum cull, and the 4N or 5N scenes only actually draw the average object N or 1.2N or whatever times, so it boils down to rendering scenes N times versus L*D times.

Where you’re using 128*128 cube maps, that means your hemicube has 6*128*128/2 = 49,152 directions. I don’t have any clue what your average L is. 4? 20? Presumably your N is very high, but I don’t see anything in the blog to get a sense of the size of it. But e.g. 64MB of 16-bit lightmaps is 11 million sample locations.

So ballparking this as N = 10M, D = 50K, L=20, I get: hemicube = 50M scenes (10-15M scenes per object), and depth peeling = 1M scenes.

So assuming the math all makes sense, and assuming that ballparking is at all reasonable, there’s a factor of 50 difference in the scene counts. Maybe that D is too high (that was your worst case, you also talk about using 32×32 and 64×64), and the L has absolutely no basis in reality.

A factor of 50 difference in scene counts is pretty significant, although as I said it depends how those compare to the pixel costs.

And if there were a significant difference in pixel counts I think maybe that would dominate anyway. As I noted hemicube is strictly N*D pixels, but there are two competing factors on the pixel counts with depth peeling, the loss of quality from using a shadow-map style technology (that may create a pressure to render at a higher resolution) and the hiding of the loss of quality from integrating over a large number of directions (that may make the spatial imprecision less important).

Does this make sense?
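The ballpark figures in the comment above can be reproduced with a few lines of arithmetic. The helper names are invented for this sketch, and the 6 bytes per texel (three 16-bit channels) is an assumption chosen because it matches the quoted 11 million sample locations.

```python
def hemicube_scene_renders(sample_locations, views_per_sample=5):
    """Scene renders for hemicube sampling (5 views, or 4 for a hemi-octahedron)."""
    return sample_locations * views_per_sample


def depth_peel_scene_renders(directions, depth_layers):
    """Scene renders for depth peeling: one peel pass per layer, per direction."""
    return directions * depth_layers


def lightmap_texels(storage_bytes, bytes_per_texel):
    """Number of whole texels that fit in the given amount of storage."""
    return storage_bytes // bytes_per_texel


# Half of a 128x128 cube map: 49,152 directions.
hemicube_directions = 6 * 128 * 128 // 2

# 64MB of lightmaps, assuming three 16-bit channels (6 bytes) per texel.
texels_in_64mb = lightmap_texels(64 * 2**20, 6)

# The ballpark values from the comment: N = 10M, D = 50K, L = 20.
N, D, L = 10_000_000, 50_000, 20
hemi = hemicube_scene_renders(N)        # 50 million scene renders
peel = depth_peel_scene_renders(D, L)   # 1 million scene renders
ratio = hemi / peel                     # factor of 50

# With K = N / L pixels per peel layer, both methods shade N * D pixels.
peel_pixels = L * D * (N // L)
```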

Sean, that makes sense, I’ve done a similar analysis and my conclusion is that at low resolutions (low N) the depth peeling method will most probably be significantly faster. In this case we are pretty much geometry limited, that is we are limited by the fixed function units of the GPU, while the shading units remain largely unused.

I’d like to see how the hemicube method performs on Fermi hardware. Fermi has distributed geometry processors, so the primitive setup rate is no longer dictated solely by the core frequency, but also by the number of cores. There are some inefficiencies introduced due to the parallelization, but overall geometry performance should be much higher, at least on the high-end chips. However, I’m afraid that if the geometry bottleneck is removed, the CPU will become the bottleneck and the GPU will still be underfed.

An advantage of the hemicube method is that it’s possible to use irradiance caching, while the depth peeling method is more brute force. This reduces the number of samples significantly; how much depends on how much quality degradation you are willing to accept. A reduction of 8x produces only a minimal quality loss, and 5x is almost indistinguishable from the original. On the other hand, this increases the CPU load, since it’s now necessary to insert, look up, and interpolate irradiance records in an octree.

I haven’t really profiled the GI at high resolutions such as 128×128, but I expect GPU utilization to be much higher, so the analysis is not that obvious. N and L are scene dependent in the hemicube and depth peeling methods respectively, but in general, as your analysis seems to confirm, I expect the depth peeling method to still be faster.

That’s right, it’s based on the GPU Gems 2 article. I haven’t read the Splinter Cell presentation yet and I’m unaware of any other resources for this method. It always struck me as odd that there wasn’t more info around.

My development approach hasn’t been very scientific so I don’t have much by way of concrete figures. To start with I was using the hemicube method to calculate vertex colours. The largest scene I worked with contained about 90k vertices and it took about 15 minutes I think. That was the old school sponza scene which you can see on my site. After that I made the switch. So far depth peeling has worked well for me although I’ve only tried it on room sized scenes. I think that larger scenes with detailed interior and exterior elements may prove difficult to render in one pass.

I’ve updated my blog with a sunlit interior scene which may be of interest

http://sites.google.com/site/copypastepixel/blog

Also, I don’t know how much of a difference it makes but I’m using C# and XNA. C++ and straight DirectX may be faster.