Despite not even being finished, The Witness is already one of my all-time favorite games. I’ve been playing it since Jon started developing it several years ago, and I could not be more excited about its upcoming release.

Unlike Jon’s previous game Braid, the asset and programming scale for The Witness is much further toward the “triple-A” side of the scale than the “indie game” side. As anyone who works on these types of projects knows, the work required to successfully pull off a game goes up dramatically when you go that direction. The Witness already has several more people working on it than Braid did at its peak, but as with any project of this size, there are still many parts of the project that need more attention than the principals can afford to give them.

For this reason, it was always my intention to try to find some time to help out on The Witness when it came time to ship it. So this past Thanksgiving, Jon and I sat down and he went through a list of things in the codebase that he thought would benefit from the attention of an extra programmer. After considering the relative importance of the items, we decided that the player movement code was the place where improvements could have the biggest impact on the player’s experience.

Walkmonster in Wall

In the context of The Witness, the goal of player movement code is to be as unobtrusive as possible. The player is supposed to fully immerse themselves in an alternate reality, and every last detail is important to this experience. The last thing you ever want to happen is to have the player notice the fact that they’re sitting at a computer asking it to move a virtual viewpoint around.

For that reason, the player movement code has to be rock solid. If you can get stuck on edges, trapped in walls, fall through the floor, walk through a cliff, walk down a hill but not back up it, etc., it immediately breaks the immersion and reminds you that you are playing an artificial experience mediated by an unreliable system. It can also lead to disastrous consequences for the player in some circumstances if there’s no way for them to recover from the problem without restarting or reloading a (possibly very old) save game. If you play games often, you’ve probably encountered one or more of these problems in shipping titles, and you know what I’m talking about.

Last week I began to work on this problem. I decided the first thing to do was to write a few integrated tools for working with the player movement code so we could analyze it and see how it was currently behaving. Immediately, when I opened up the project, I found myself with a familiar conundrum: what to name my first source file. This is always the most important part of any project (as Bob Pollard once said of band names and album names). If you have the right name for your source file, it’s smooth sailing from there. Pick the wrong name, and you might as well call the project off.

But what to call a system for bulletproofing player movement code? I’d never been asked to write code for that before. When I thought about it, I realized that I’d only personally seen evidence of similar code once before: when playing an early beta of Quake. There were some bugs with the monster placement, and in the console window, you could see error messages announcing the fact that the monsters, instead of spawning on the ground, had spawned partially intersecting with the level geometry. Each debug message began with the phrase “walkmonster in wall at. . .”

Bingo. It’s hard to ask for a better source file name than “walk_monster.cpp”. I was pretty sure, from that point forward, the code was going to come along nicely.

Drive Toward Point

When you’re trying to test a system, the most important thing to do is actually test the system. Even though that sounds like a straightforward rule, people writing tests often fail to observe it.

In this specific case, there are very easy ways to think that you are testing the player movement code without actually testing it. One example would be to do some kind of analysis on the collision volumes and walkable surfaces in the game, looking for small areas, gaps, etc. Once you’d eliminated all of these, you’d then proclaim the world safe to traverse and move on.

But this is testing the data, not the actual code. It’s still easily possible to have bugs in the movement code that result in bad behavior even with sanitized data.

To avoid this kind of trap, I wanted the testing system to remain as close as possible to what a human does to actually control player movement in the game. I started out by writing two routines to serve as the building blocks of this kind of testing.

The first is the closest to real human usage. It’s an update call that hooks into The Witness’s input processing and feeds it synthetic mouse and keyboard events. It can do some basic things that humans might do, such as looking around, walking toward a point, looking toward a point, and so on. It does these through nothing but emulation of human interaction with the mouse and keyboard, so I know that everything in The Witness’s input path will be running as it actually would during testing. I’ll talk more about this system and how it’s used in later installments.

The second piece of code is one step removed from that level. It’s a function called DriveTowardPoint that takes two points in the world and, calling the existing player collision system, attempts to move cleanly from one to the other. When it returns, it provides information about the attempt such as obstacles encountered and whether or not the destination was successfully reached.
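To make the shape of such a function concrete, here is a minimal sketch. It is not the code from The Witness: the real function calls the game's existing collision system, whereas this version assumes a toy 2D world of circular obstacles and simply walks in small fixed steps. The names `Vec2`, `Circle`, `DriveResult`, `Blocked`, and the `step` parameter are all hypothetical.

```cpp
#include <cmath>
#include <vector>

struct Vec2 { double x, y; };

// Hypothetical stand-in for the game's collision world: circular obstacles.
struct Circle { Vec2 center; double radius; };

// What the caller learns about the attempted walk.
struct DriveResult {
    Vec2 end;      // where the walk actually stopped
    bool reached;  // true if the destination was reached
    bool hit;      // true if an obstacle stopped the walk
};

// Returns true if p lies strictly inside any obstacle.
inline bool Blocked(const std::vector<Circle>& world, Vec2 p) {
    for (const Circle& c : world) {
        double dx = p.x - c.center.x, dy = p.y - c.center.y;
        if (dx * dx + dy * dy < c.radius * c.radius) return true;
    }
    return false;
}

// Walks from 'from' toward 'to' in small steps and stops at the last free
// position before an obstacle. No velocities or other physics state are
// passed in, matching the description in the text.
inline DriveResult DriveTowardPoint(const std::vector<Circle>& world,
                                    Vec2 from, Vec2 to, double step = 0.05) {
    double dx = to.x - from.x, dy = to.y - from.y;
    double length = std::sqrt(dx * dx + dy * dy);
    int steps = static_cast<int>(std::ceil(length / step));
    Vec2 current = from;
    for (int i = 1; i <= steps; ++i) {
        double t = static_cast<double>(i) / steps;
        Vec2 next = { from.x + dx * t, from.y + dy * t };
        if (Blocked(world, next)) return { current, false, true };
        current = next;
    }
    return { current, true, false };
}
```

A caller would pass the two points and inspect `reached` and `end`. A fixed-step walk like this can tunnel through obstacles thinner than `step`, which is one reason a real system would rely on the game's own collision code instead.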

This function is not quite as reliable as the synthetic input method of testing, because it eliminates a portion of the actual player movement system from testing. For example, any erroneous state associated with where the player is that the collision system might have built up won’t affect tests using this function. Nevertheless, I felt it valuable to have this level of testing available because it can much more rapidly test large areas, since it does not require the entire game loop to run, and thus can be employed much more frequently and over the entire world instead of just isolated test runs.

It’s also worth noting that there are no physics inputs to this function; there are no velocities specified for the starting point, for example. This is because The Witness is not an action game, so there are few stateful physical properties for the player. Players can’t jump, they can’t wall-run, they can’t enter bullet-time. Supporting these types of behaviors is possible with systems of the kind I’ll be discussing, but they add a layer of complexity that we don’t have to worry about on this project.

Anyhow, with DriveTowardPoint in place, it was possible for me to start on my first goal for the system: determining everywhere the player can go on the island of The Witness.

Rapidly Exploring Random Trees

Where can the player go? It seems like a simple question, but you’d be surprised how many games ship without the development team knowing the real answer. If possible, I want The Witness to be one of the few games where the team knows prior to shipping exactly where the player can and can’t go, no surprises.

This makes the problem statement, but perhaps not the problem, very simple: given a DriveTowardPoint function that faithfully determines whether a player could move in a straight line between two points, produce a coverage map showing everywhere the player could end up.

For reasons that I can’t necessarily explain, I had in my head, even before writing a line of code, that the best way to do this would be to use a Rapidly Exploring Random Tree. For those of you unfamiliar with this algorithm, it’s a very simple process whereby you record all the points you’ve visited along with a reference to the point from which you walked there. To add points to the tree, you pick a random target point anywhere in the world, select the closest point in your tree thus far, and try to get from that tree point to the target point. Wherever you end up, that’s a new sample point.

Normally, this is more of a pathfinding algorithm, so interleaved with random points, you repeatedly select some goal point as a target. This biases the exploration of the space toward the target, which is what you want when your only aim is to reach the goal. But in this case, I wanted to produce a complete map of everywhere the player could go, so I was exclusively using random samples.
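The purely random variant described above can be sketched in a few lines. This is an illustration under assumptions, not the Walk Monster source: `DriveFn` is a hypothetical callback standing in for DriveTowardPoint that returns wherever the walk ended, and the world bounds and random seed are parameters I made up for the example.

```cpp
#include <cstddef>
#include <functional>
#include <random>
#include <vector>

struct Vec2 { double x, y; };

struct TreeNode {
    Vec2 position;
    int parent;  // index of the node we walked from, -1 for the root
};

// Hypothetical stand-in for DriveTowardPoint: returns where the walk ended.
using DriveFn = std::function<Vec2(Vec2 from, Vec2 to)>;

inline double DistSq(Vec2 a, Vec2 b) {
    double dx = a.x - b.x, dy = a.y - b.y;
    return dx * dx + dy * dy;
}

// Grows a tree using only random targets (no goal bias), as in the text.
inline std::vector<TreeNode> ExploreRandomTree(Vec2 start, Vec2 worldMin,
                                               Vec2 worldMax, int iterations,
                                               const DriveFn& drive,
                                               unsigned seed = 1) {
    std::mt19937 rng(seed);
    std::uniform_real_distribution<double> randomX(worldMin.x, worldMax.x);
    std::uniform_real_distribution<double> randomY(worldMin.y, worldMax.y);

    std::vector<TreeNode> tree = { { start, -1 } };
    for (int i = 0; i < iterations; ++i) {
        Vec2 target = { randomX(rng), randomY(rng) };

        // Select the closest point in the tree so far (linear scan).
        std::size_t nearest = 0;
        double best = DistSq(tree[0].position, target);
        for (std::size_t n = 1; n < tree.size(); ++n) {
            double d = DistSq(tree[n].position, target);
            if (d < best) { best = d; nearest = n; }
        }

        // Wherever the walk ends up becomes a new sample point.
        Vec2 end = drive(tree[nearest].position, target);
        tree.push_back({ end, static_cast<int>(nearest) });
    }
    return tree;
}
```

The linear nearest-point scan keeps the sketch short; a spatial index would be the usual replacement once the tree grows large.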

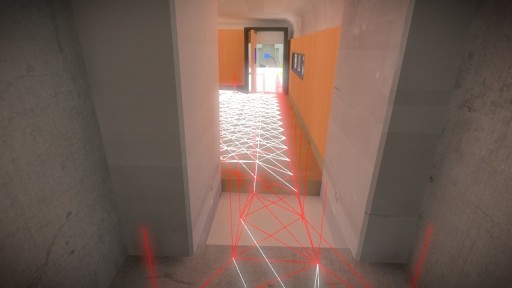

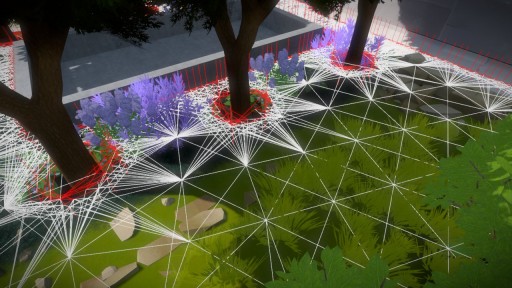

After implementing this (it is thankfully a very simple algorithm to write, so it doesn’t take long), I could see that it did do a reasonable job of exploring the space (the white etchings show paths that have been explored, and the red vertical lines show places where an obstacle was hit):

However, once I actually looked at how it behaved, it was clear to me that it wasn’t really the algorithm I wanted. For example, even after many iterations, it often barely explores rooms like this one, despite densely covering the area just outside, simply because it doesn’t pick enough random targets inside it:

If I’d thought about it before I started, I would have realized that the benefit of something like a Rapidly Exploring Random Tree is that it explores high-dimensional spaces efficiently. In fact that’s the primary reason you normally use it. But in The Witness, we don’t have a high-dimensional space. We have a two-dimensional space (distributed on an intricate manifold, yes, but a two-dimensional space nonetheless).

In this low-dimensional space, the benefits of a Rapidly Exploring Random Tree are muted, and the drawback is critically bad for my stated purpose: it’s designed to most efficiently find ways to connect pairs of points in a space, not to efficiently find all reachable points in that space. If you care about the latter, then Rapidly Exploring Random Trees will actually take an excruciatingly long time to do so.

So, I quickly realized that what I should be looking for is an algorithm that efficiently covers low-dimensional spaces in their entirety.

3D Flood Filling

Once I actually thought about the choice of algorithm, it seemed obvious that what I actually wanted was something like the old 2D flood fills we used to use to fill regions of a bitmap. Given a starting point, I just wanted to fill up the entire space, exhaustively probing every possible way you could go. Unfortunately, this is a lot less straightforward in the world of The Witness than it is in a 2D bitmap, for a number of reasons.

First, there’s no clear concept of finite connectivity around a point. Everything is continuous. It’s not like pixels where you can easily enumerate 4 possible places to go from every given location, and check each one in turn.

Second, there’s no fixed size for a locus in space like there is for a pixel in a bitmap. Walkable surfaces and obstacles can be anywhere, they have no minimum or maximum feature size, and no alignment to any external grid.

Third, although walking around in The Witness locally acts as if it were on a plane, the space itself actually is a deeply interconnected and varying manifold, with walkable regions directly above other regions (stacked perhaps several times over), and special connectivity that changes based on world states (such as doors that open, elevators that go up and down, etc.)

Given these complexities, it is very easy to think you have devised a way to do a flood fill, only to find after you’ve implemented it that it gets bogged down oversampling areas, misses important paths, produces erroneous connectivity information at places where the manifold is complex, or is simply too cumbersome to use because it must be restarted to cope with changes in the world state.

No good solution to all of this immediately came to mind, so I tried some simple experimentation. Using the Rapidly Exploring Random Tree code I had written, I changed the target point selection from random selection to a very controlled selection. Each time a new point was added to the tree, I would say that points a unit step along the cardinal directions from that point were to be considered future targets, just like a basic 2D flood fill.

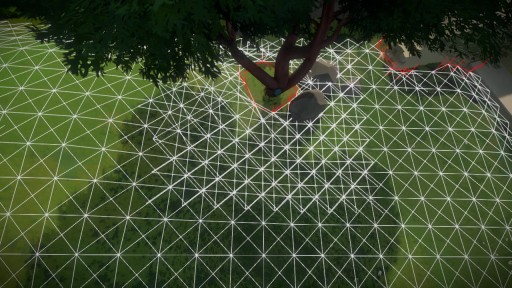

But of course, this would produce a useless sampling loop if I wasn’t careful. A point would branch out to a neighborhood of 8 points around it, but those 8 points would all then want to try the original point again, and this would go back and forth forever. So in addition to the controlled target selection, I also needed the simple constraint that any target which wasn’t some minimum useful distance away from an existing target point would not be considered. To my surprise, these two rather simple rules did produce a somewhat usable flood fill:

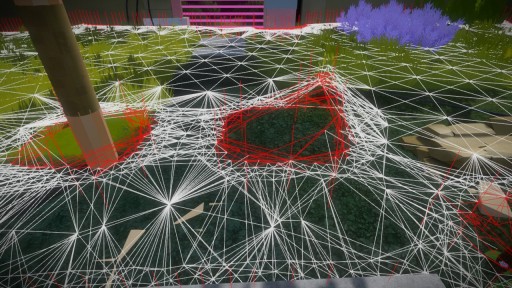
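The two rules above (controlled target selection plus a minimum-distance rejection) might look roughly like the following sketch. The `DriveFn` callback, the `maxPoints` cap, and the use of eight step directions are assumptions for the example. I also apply the minimum-distance test to the point where the walk actually ended, which the text does not state, so that the sketch is guaranteed to terminate.

```cpp
#include <cstddef>
#include <deque>
#include <functional>
#include <vector>

struct Vec2 { double x, y; };

// Hypothetical stand-in for DriveTowardPoint: returns where the walk ended.
using DriveFn = std::function<Vec2(Vec2 from, Vec2 to)>;

// True if p is closer than minDist to any point already in the map.
inline bool TooClose(const std::vector<Vec2>& points, Vec2 p, double minDist) {
    for (const Vec2& q : points) {
        double dx = p.x - q.x, dy = p.y - q.y;
        if (dx * dx + dy * dy < minDist * minDist) return true;
    }
    return false;
}

inline std::vector<Vec2> FloodFill(Vec2 start, double step, double minDist,
                                   const DriveFn& drive, std::size_t maxPoints) {
    static const double kDirs[8][2] = { {1, 0}, {1, 1}, {0, 1}, {-1, 1},
                                        {-1, 0}, {-1, -1}, {0, -1}, {1, -1} };
    struct Pending { std::size_t from; Vec2 target; };

    std::vector<Vec2> points = { start };
    std::deque<Pending> pending;

    // Rule 1: every new point schedules a fixed pattern of targets around it.
    auto enqueueNeighbors = [&](std::size_t index) {
        Vec2 p = points[index];
        for (const auto& d : kDirs) {
            pending.push_back({ index, { p.x + d[0] * step, p.y + d[1] * step } });
        }
    };
    enqueueNeighbors(0);

    while (!pending.empty() && points.size() < maxPoints) {
        Pending next = pending.front();
        pending.pop_front();

        // Rule 2: skip targets that sit too close to an existing point.
        if (TooClose(points, next.target, minDist)) continue;

        Vec2 end = drive(points[next.from], next.target);
        // Assumption of this sketch: also reject end points that land on top
        // of existing points (for example, after stopping at a wall).
        if (TooClose(points, end, minDist)) continue;

        points.push_back(end);
        enqueueNeighbors(points.size() - 1);
    }
    return points;
}
```

With `minDist` smaller than `step`, walks that stop at an obstacle can leave points that are off the sampling pattern, and nothing prevents those points from scheduling targets back in the open region.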

Not bad for just trying the obvious thing. But it suffers from what I’ve taken to calling “boundary echo”. You can see this effect in the following screenshot, taken while the map exploration was still in progress:

In regions with no obstacles, this algorithm proceeds nicely, sampling at a relatively even distance. But once it hits a boundary, the intersections there produce points that are “off grid”, in that they are not aligned along the sampling pattern with which the algorithm filled the adjacent open region. The reason “on grid” points don’t produce overly dense tessellation is because any new point that tries to step back onto one of the previous points finds the previous point there and declines to reconsider it. But when new points are produced on the boundary, they are completely unaligned, so there is nothing to stop them from stepping back into the already explored space. This creates a wave of offset sampling which will continue until it runs into a fortuitous line of points somewhere else that happen to be close enough to its advancing front for it to consider them coincident.

Although this may not seem like a huge problem, it is actually critical. The entire goal of an algorithm like this is to concentrate the samples in the areas where they are most likely to yield productive results. The more time you spend sampling and resampling wide open regions, the less time you spend mapping the actual edges of that region, which is the information you actually wanted. Because this is a continuous space and only an infinite number of samples can truly reveal its exact shape, your ratio of meaningful to meaningless samples is literally the measure of how effective your algorithm is at producing the walkable area.

Now, there is an easy fix for this specific problem: expand the distance at which two points are considered “close enough”. But by doing this to reduce sampling density in places you don’t care about, you lose sampling density in places you do care about, like around boundaries where you are trying to carefully probe for holes.

Localized Directional Sampling

Perhaps because I had started with a Rapidly Exploring Random Tree, my brain had been pigeonholed into thinking about proximity to the exclusion of everything else. The previous algorithms had all been about using proximity to do things, like figure out whether a new point should be considered, or which point to start from when trying to get to a new target point.

But after thinking about the problem for a while, I came to realize that everything falls more neatly into place if you think in terms of directionality as well as proximity. While obvious in hindsight, if you’ve worked on these sorts of problems before, you know it’s easy to get trapped in one way of thinking for a while and not see the bigger picture, even if it turns out to be a simple one. That is precisely what had happened to me.

Once I shifted perspective, the correct sampling behavior was obvious. Each time I wanted to expand the exploration from a point, I would do a proximity query for a local neighborhood of existing nearby points. Instead of using the distance to those points to guide the exploration, however, I would categorize them by their direction (currently, I just use the eight cardinal directions, but I’d like to experiment with different kernels here).

In any direction where I don’t “see” a point, I walk a predetermined distance and add a point wherever I end up (whether I hit something or not). In any direction where I do see a point, I walk there and make sure I can get to it. If I can, I just add a visible edge, so it’s easy for the user to see that we’re connected. If I can’t, then I do add a new point at the collision, revealing the obstacle boundary.
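As a rough illustration of a single expansion step as described above, here is a sketch under several assumptions. The `WalkMap` layout, the `DriveFn` callback, the `Octant` binning, and the `minMove` guard against zero-length walks are all hypothetical, and the sketch covers only one expansion, not the outer loop that decides which point to expand next.

```cpp
#include <cmath>
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

struct Vec2 { double x, y; };
struct DriveResult { Vec2 end; bool reached; };

// Hypothetical stand-in for DriveTowardPoint.
using DriveFn = std::function<DriveResult(Vec2 from, Vec2 to)>;

struct WalkMap {
    std::vector<Vec2> points;
    std::vector<bool> fromCollision;  // parallel to points
    std::vector<std::pair<std::size_t, std::size_t>> edges;
};

const double kPi = 3.14159265358979323846;

// Maps an offset to one of eight 45-degree sectors; sector 0 is centered on +x.
inline int Octant(double dx, double dy) {
    int sector = static_cast<int>(std::floor(std::atan2(dy, dx) / (kPi / 4.0) + 0.5));
    return (sector + 8) % 8;
}

inline void ExpandPoint(WalkMap& map, std::size_t index, double step,
                        double radius, const DriveFn& drive,
                        double minMove = 1e-6) {
    const Vec2 origin = map.points[index];

    // Proximity query, then categorize neighbors by direction. Only the
    // nearest neighbor in each direction is remembered.
    int seen[8];
    double seenDist[8];
    for (int o = 0; o < 8; ++o) { seen[o] = -1; seenDist[o] = 0.0; }
    for (std::size_t n = 0; n < map.points.size(); ++n) {
        double dx = map.points[n].x - origin.x, dy = map.points[n].y - origin.y;
        double d = std::sqrt(dx * dx + dy * dy);
        if (n == index || d == 0.0 || d > radius) continue;
        int o = Octant(dx, dy);
        if (seen[o] < 0 || d < seenDist[o]) { seen[o] = static_cast<int>(n); seenDist[o] = d; }
    }

    for (int o = 0; o < 8; ++o) {
        bool haveNeighbor = seen[o] >= 0;
        Vec2 target = haveNeighbor
            ? map.points[seen[o]]
            : Vec2{ origin.x + step * std::cos(o * kPi / 4.0),
                    origin.y + step * std::sin(o * kPi / 4.0) };
        DriveResult walk = drive(origin, target);

        if (haveNeighbor && walk.reached) {
            // We can get there: just record the connection.
            map.edges.push_back({ index, static_cast<std::size_t>(seen[o]) });
            continue;
        }
        double mx = walk.end.x - origin.x, my = walk.end.y - origin.y;
        if (std::sqrt(mx * mx + my * my) < minMove) continue;  // did not move

        // Empty direction (hit or not) or blocked neighbor: add a new point.
        map.points.push_back(walk.end);
        map.fromCollision.push_back(!walk.reached);
        map.edges.push_back({ index, map.points.size() - 1 });
    }
}
```

Because the neighbor lookup is done against whatever points exist at the time of the call, new seed points can be added to `points` at any moment and later expansions will pick them up.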

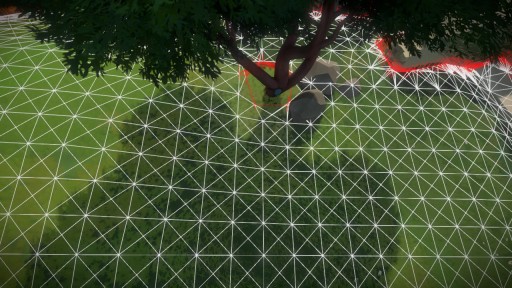

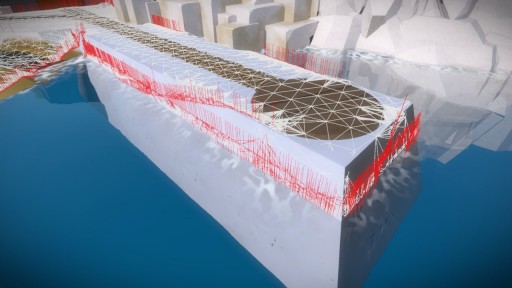

This sampling method works beautifully. It allows you to very accurately control the sampling with nicely tunable parameters, keeping all the points you want while preventing unnecessary tesselation, which leads to a very fast filling of the space:

Because it searches along directions rather than just using proximity, it is immune to boundary echo, and restricts oversampling to the boundaries where you actually want it:

Furthermore, it’s completely impervious to state changes or complex manifold problems. It deals strictly with points and those points can be anywhere, and you can add new ones at any time. If you’ve already made a map of an area with a door closed, and you open the door, all you have to do is plant a single new exploration point on the other side of the door and say “continue expanding this map”, and it’ll connect itself back up and explore the entire area beyond the door properly.

You can also change any of the core parameters at any time, and everything still works. Want to sample an area more densely? Just set the default spacing lower. You can do this in the middle of a map build, and it will start sampling more densely, with no need to jettison the existing results (which may have taken some time to produce).

Rudimentary Edge Probing

Although the algorithm by default will already sample boundaries more thoroughly, because intersections produce additional points beyond the sampling pattern, it didn’t necessarily probe them as thoroughly as I wanted, because it doesn’t do anything special when it encounters obstacles. It occurred to me that since I know which points were produced from collisions and which were not, whenever two collision points found themselves connected by an edge, I could choose to invoke extra sampling to try to find more boundary points nearby.

I haven’t yet worked on this extensively, but I put in a rudimentary method to test the theory, and it shows promise. For any two collision points connected by an edge, I step to the midpoint of the edge and probe outwards perpendicular to the edge. If I don’t collide with the boundary within a very short distance, I assume that the boundary is more complex, and add a new target there so the search will continue in that area.
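A sketch of that midpoint probe might look like the following. The `interior` hint (a known walkable point used to decide which perpendicular counts as "outward"), the out-parameter, and the `DriveFn` callback are assumptions of this example; the text does not say how the outward side is chosen.

```cpp
#include <cmath>
#include <functional>

struct Vec2 { double x, y; };
struct DriveResult { Vec2 end; bool reached; };

// Hypothetical stand-in for DriveTowardPoint.
using DriveFn = std::function<DriveResult(Vec2 from, Vec2 to)>;

// Probes outward from the midpoint of an edge between two collision points.
// Returns true and writes a new exploration target if the probe travels the
// whole probe distance without colliding, which suggests the boundary is more
// complex here than the edge implies.
inline bool ProbeEdge(Vec2 a, Vec2 b, Vec2 interior, double probeDist,
                      const DriveFn& drive, Vec2* target) {
    Vec2 mid = { (a.x + b.x) * 0.5, (a.y + b.y) * 0.5 };
    double ex = b.x - a.x, ey = b.y - a.y;
    double length = std::sqrt(ex * ex + ey * ey);
    if (length == 0.0) return false;

    // Unit normal to the edge, flipped so it points away from 'interior'.
    double nx = -ey / length, ny = ex / length;
    if (nx * (interior.x - mid.x) + ny * (interior.y - mid.y) > 0.0) {
        nx = -nx;
        ny = -ny;
    }

    // Step to the midpoint first; give up if even that is not walkable.
    if (!drive(a, mid).reached) return false;

    Vec2 probeEnd = { mid.x + nx * probeDist, mid.y + ny * probeDist };
    DriveResult probe = drive(mid, probeEnd);
    if (!probe.reached) return false;  // boundary is where we expected it

    *target = probe.end;
    return true;
}
```

Any target returned this way would simply be handed back to the main search as a new point to expand.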

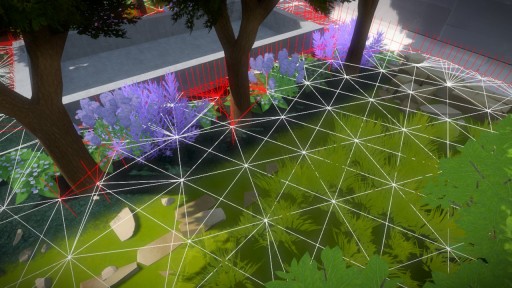

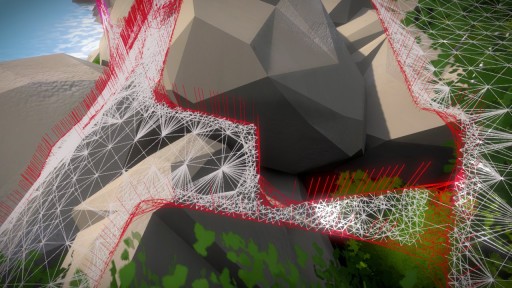

Even this simple scheme produces nicely dense sampling along the boundary, without any oversampling of open regions nearby. Here’s a region with several boundaries, but without edge probing:

Now here’s the same region with edge probing:

As pleased as I am with this, I’d be surprised if there weren’t significantly better algorithms for sampling the boundary, and I look forward to trying a few more methods in the future.

Early Victories

Even with little development time, and the resulting rather simplistic code, Walk Monster already produces very usable output that can find real problems in the game. Here are just a few examples of problems I’ve noticed in passing while developing the algorithm:

The slopes on the side of this extended platform should not have been walkable, but they were. This was because the player movement code has a pathologically bad way in which it deals with sloping geometry. I now know that’s in there, and I’ll be sure to fix it when the time comes to bulletproof it.

The Witness is meant to be a contemplative game, but wondering why there seemed to be a rock where there was no rock was not meant to be one of its koans. As you might guess, this was because someone left a collision volume in the game when the geometry it represented had already been removed. This is an easy thing to have happen, and it’s nice to have a tool that trivially reveals these mistakes quickly so people don’t have to worry about it.

These were supposed to be impenetrable rocks, but Walk Monster revealed them to be anything but. What’s worse, Walk Monster revealed that the path was somehow only traversable in one direction (from left to right in this top-down screenshot), which is never supposed to happen. I haven’t actually looked at this problem yet, besides verifying that I could actually do it via player motion (I could). It’ll be interesting to see what’s going on!

Open Questions

It’s encouraging to already have good results upon which we can build. Like I said, if you pick the right name for your source files, it all flows smoothly from there! But all of this work was done in the span of a few days, so it is obviously far from comprehensive and a lot of things are still completely ad hoc. If I have time to develop these systems further, there are a number of questions worth answering.

First, what kinds of post-processing on the data should I do to make it easiest to visualize? Drawing the raw network of points and edges can be very difficult for people to visually untangle, and some sort of better representation might make it possible for people to quickly understand more complex walkable areas at a glance.

Second, how can I improve the sampling patterns around boundaries to ensure that I find the greatest number of holes? Are there good methods for characterizing the ways in which shapes fall into a lattice, and are there good tessellation schemes that maximize the chances of intersecting and passing through these shapes?

Third, are regular sampling patterns or randomized sampling patterns better for filling spaces? I could easily modify the target point selection criteria to produce randomized patterns, but it’s not clear if this is worthwhile or, if it is, what kind of randomized patterns would be best.

Fourth, what other information would we like to get from our walkable area maps, now that we can build them? For example, it would be a straightforward extension of the existing system to do things like pathfinding or distance mapping, where a user could pick a point and ask for the shortest path between it and some other point, or to see a heat map of the distance between that point and all other points in the map. Are queries like this useful? What other queries might be?
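For the distance-mapping query mentioned in the fourth question, one plausible approach is to run Dijkstra's algorithm over the point-and-edge graph. The sketch below is my own illustration, not part of Walk Monster: the `Edge` struct and `DistanceMap` function are hypothetical, and edges are treated as walkable in both directions, which the one-way path found earlier shows is not always true of the real data.

```cpp
#include <cmath>
#include <cstddef>
#include <functional>
#include <limits>
#include <queue>
#include <utility>
#include <vector>

struct Vec2 { double x, y; };
struct Edge { std::size_t a, b; };  // indices into the point list

// Returns the shortest walking distance from 'source' to every point, or
// infinity for points that are not connected to it.
inline std::vector<double> DistanceMap(const std::vector<Vec2>& points,
                                       const std::vector<Edge>& edges,
                                       std::size_t source) {
    const double inf = std::numeric_limits<double>::infinity();

    // Build an adjacency list weighted by straight-line edge length.
    std::vector<std::vector<std::pair<std::size_t, double>>> adjacency(points.size());
    for (const Edge& e : edges) {
        double dx = points[e.a].x - points[e.b].x;
        double dy = points[e.a].y - points[e.b].y;
        double length = std::sqrt(dx * dx + dy * dy);
        adjacency[e.a].push_back({ e.b, length });
        adjacency[e.b].push_back({ e.a, length });  // assumes two-way edges
    }

    std::vector<double> dist(points.size(), inf);
    using Item = std::pair<double, std::size_t>;  // (distance so far, point)
    std::priority_queue<Item, std::vector<Item>, std::greater<Item>> open;
    dist[source] = 0.0;
    open.push({ 0.0, source });

    while (!open.empty()) {
        Item top = open.top();
        open.pop();
        if (top.first > dist[top.second]) continue;  // stale queue entry
        for (const auto& next : adjacency[top.second]) {
            double candidate = top.first + next.second;
            if (candidate < dist[next.first]) {
                dist[next.first] = candidate;
                open.push({ candidate, next.first });
            }
        }
    }
    return dist;
}
```

The resulting distances could be colored directly as a heat map, and recording each point's predecessor during the search would give shortest paths as well.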

For now, the walkable area visualizations we’re already getting from Walk Monster are more than enough to show us that the player movement code is pretty broken. I had planned to move on to creating a system for overnight testing via the user input method, but it seems clear that we already have enough problems to fix that there’s no need for that yet. So my next order of business, before improving these tools any further, will be to see what I can do to make the player movement code more robust. While I’m at it, I’d also like to see if I can get it an order of magnitude or two faster, because currently, the thing that slows down Walk Monster rather severely is the slow speed of the collision system.

With luck, I’ll report back soon in another installment on how that work is going.

This has been a fun and informative article to read, thanks for putting the time into writing it up!

“After considering the relative importance of the items, we decided that the player movement code was the place where improvements could have the biggest impact on the player’s experience.”

Thank you! Controls are so amazingly important these days! I’m glad to see you’re taking them seriously. :)

Thanks for publishing this. It’s cool how the walk monster was able to outline the invisible rock and uncover the other problems. It seems like it will be an important tool.

There are only 2 things hard in programming: cache invalidation, naming things and off by one errors. https://twitter.com/Jonathan_Blow/status/267836389956149248

Very interesting post! Thank you for giving insight into this kind of behind-the-scenes work!

Great article! I have to read it again, just to make sure I don’t lose more important information. One possible output of this would be to be able to click on several points along a path and have it generate your more accurate input method via keyboard and mouse if you start running into paths that are difficult to reproduce as a player.

Yes, definitely. Right now there hasn’t been any need for such things because there are blatant problems showing up that are trivial to reproduce. As we improve the collision system, and we get down to subtler bugs, I suspect that we will need more assistance with repros like you say.

– Casey

Here’s an algorithm that’s quicker to write for the people looking to do this on their own projects: First cover the entire world with a grid of squares of uniform size with the corners being the points being tested for reachability and the edges being the paths being tested for walkability. Check each edge, as well as the diagonals, for movement in both directions. Then, for each square that isn’t completely walkable, break it into four smaller squares by adding a node in the center. Keep subdividing until a minimum size threshold is reached. This is similar to a quad-tree. When your algorithm completes, go back and check for high level connectivity; islands of unreachable but walkable area are just asking for trouble.

As for parameters, make sure your starting grid size is smaller than the smallest collideable area. Also, run this test with the player being half the size you intend to use in the final game. The minimum subdivision threshold should be half of your test object size (so 1/4 player size).

This is not a good algorithm for a number of reasons. First, “cover the world with squares” is glossing over the main hard part of these types of algorithms. How do you propose to cover an arbitrary polygon-soup world with squares, exactly? Second, checking the world on a regular grid means that you will never find bugs in the collision system that don’t align to that grid. Third, subdividing and rewalking rectilinear edges doesn’t properly probe boundaries. You could have all sorts of thin-hole bugs along a boundary and never find them because you’re only ever stepping rectilinearly or diagonally to the grid on power-of-two boundaries.

Etc., etc.

The LDS algorithm I propose is actually much simpler to code than what you’re suggesting (it’s very few lines of code), doesn’t have these problems and handles the world covering problem implicitly, without needing to have a grid imposed for it. It also takes far fewer unnecessary samples since it does not need to use subdivided rectangles to probe what are inherently non-rectangular boundaries.

I’m not going to argue that my algorithm is better; it’s not. You find exact edges whereas mine does not. Yours handles polygon soup where mine assumes that you can’t walk up a steep slope and I did skip over handling the overlapping areas. Yours is more computationally efficient, mine throws CPU cycles at the problem. But I still think mine is simpler from the perspective that it doesn’t require as many IQ points from the programmer, which can make the difference between doing something and getting overwhelmed doing nothing. (It’s the same reason why oct-trees were more popular than BSP until common tools were released) I apologize for not being clearer.

None the less, my recommendation of testing with a smaller collision body will help on any algorithm that uses floating point. If you have a hole that’s exactly big enough you may or may not find it due to precision issues.

Keep up the good work.

Awesome post on many levels:

1. An interesting take on the specific problem.

2. It’s great to see your overall thought process tackling a fairly open-ended problem

3. It’s especially exciting to see the level of detail and care going into the game because I’m really excited to play it :)

Keep ’em coming!

Fascinating, thank you.

Wow!! Waiting to feel the player movement. This would really be an all-round experience!! :)

To have no disturbance of player getting stuck would be awesum!! The Witness will really make us “witness” an island’s beauty, the puzzles, the player’s movement and many other things. A true adventure game in every sense.

In that rock hindrance problem you said that the player was able to move up that rock instead of his movement being hindered… while you check if the next point is possible to reach can you also check if the midpoint is a path not possible to traverse and if it still is then is its one-fourth possible to be traversed and so on?? This will surely make the movement a lot slower but I just wanted to know if it will help in solving the problem or not.. :)

(This is what came into my mind first after reading the article)

The collision system internally already does (or at least tries to do) continuous collision detection, so in theory there should not be errors of the form that would be corrected by successive refinement like you describe. However, I have not actually looked into this case yet, so I can’t say what the actual problem is or whether it would be solved by various fixes.

Hmm… that’s weird. :)

Thanks for this wonderful post! Was a very fun and informative read, I just hope I will be able to produce tools like this in the future, Lua is currently my first and only advance into the world of coding :)

Also thank you for putting such a great amount of time and effort into making sure the movement for The Witness is perfect. Movement bugs are probably the most annoying and distracting to be found in games, cannot wait to play :D

Does this mean you can do a dynamic version of “Myst-style” movement? Like, click the edges of the screen to rotate 60º, or click somewhere in the middle of the screen to walk there?

The movement in The Witness, at least on the PC, is all continuous, so you can walk wherever you want and look wherever you want, just like any other modern first-person game. It does not restrict the player to clicking on edges of the screen to turn fixed amounts, or moving forward in specific amounts, etc., like older pre-rendered games did.

– Casey

Yeah, that makes sense, but I don’t think it would necessarily have to come across as a restriction (that just depends on the implementation!). A click-to-move system might be a nice homage to the prerendered times and it seems like there’s some room for really usable innovation there.

Obviously, many PC gamers will only accept WASD/mouselook, but others might actually prefer a simplified movement scheme to match the methodical, pensive nature of the game. It would certainly at least be appropriate for touchscreen platforms!

Anyway – I’m rambling now. As always, you guys are doing fantastic work; keep it up!

I’m going to let Jon comment on this, because I’m not sure what he wants to say publicly about the interface and so on. I was simply saying that from a _systems_ perspective, we need to be able to handle continuous movement and continuous look-around; that’s a core requirement. The interface design isn’t my purview so I can’t comment on that.

– Casey

There’s an interesting case (which I’m assuming the collision code covers) for places where the player’s collision volume collides with geometry above ground level (i.e., tree branches, overhanging ledges, etc). I only mention this because all of the screenshots you posted seem to focus on ground-level collisions.

In addition to finding orphaned collision volumes, you could use this to find objects that are lacking collision volumes. There are a couple of different ways you could do this but the simplest would probably be to see if there are any walkable points contained within a collidable object’s ground-level footprint.

Overall a very interesting article and certainly an enjoyable read. I look forward to your next post.

First, although the system is drawing the graph on the ground, it’s actually doing the full testing process to see whether or not the player could have walked that path. So off-ground things that would have prevented player motion are implicitly accounted for.

Second, I had the same thought for finding missing collision volumes, but unfortunately the way The Witness world works is not amenable to this. There are lots of actual objects that litter the ground which are not “collidable” objects, because it is considered too annoying for the player to have to navigate around them, so we’d rather just let you walk over them, etc. So the system can’t really automatically detect what should and should not have a collision volume, unfortunately.

There may be some metric I can employ that’s like “if the thing is a certain size” or something like that, and maybe I’ll try that at some point just because it might catch a few things, but unfortunately there’s no way to definitively make something that can detect all cases of a forgotten volume (at least not that I’ve thought of yet).

– Casey

I’m curious if you have already considered and rejected the standard navigation-mesh system (such as that used by the open-source library Recast). It might be a simpler approach to this problem, eliminating the need for a collision system at all.

First you rasterize your polygon soup into voxels (keeping track of the steepest slope at each voxel). Next, you use the slope and connectivity information to flag walkable voxels, and finally, you convert the contiguous walkable voxels into a navigation mesh. Now instead of dealing with moving a capsule through polygon soup, you are just moving a point on the surface of a connected triangle mesh!
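
As a rough illustration of the slope-and-connectivity step only, here is a sketch that works on a plain 2D height grid instead of real voxels and stops before any mesh is built. It does not use Recast's API; `walkable_cells` and its parameters are assumptions made up for this example.

```python
import math
from collections import deque


def walkable_cells(heights, cell_size, max_slope_deg, start):
    """Flood-fill from `start`, crossing only between neighbouring cells
    whose height difference stays within the slope limit."""
    rows, cols = len(heights), len(heights[0])
    max_step = math.tan(math.radians(max_slope_deg)) * cell_size
    reached = {start}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            if (nr, nc) in reached:
                continue
            if abs(heights[nr][nc] - heights[r][c]) <= max_step:
                reached.add((nr, nc))
                queue.append((nr, nc))
    return reached


# Gentle ramp in the first three columns, a cliff in the last one.
heights = [
    [0.0, 0.1, 0.2, 3.0],
    [0.0, 0.1, 0.2, 3.0],
    [0.0, 0.1, 0.2, 3.1],
]
region = walkable_cells(heights, cell_size=0.3, max_slope_deg=45.0, start=(0, 0))
print(sorted(region))
```

In this toy grid the fill covers the ramp and never reaches the top of the cliff, so the cliff-top cells would be left out of whatever mesh is built from the result.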

I have not looked at Recast specifically, but now that I am starting to work on actually revising the collision system, I have been thinking about the best ways to change it into something workable. Some of that thinking is about whether or not preprocessing is a win, and what sort of preprocessing I might do. At the very least, I suspect I will do some sort of geometry processing to produce an analytic walk map so that I can compare it to the Walk Monster walk map and ensure that they coincide. Whether or not I actually do preprocessing to produce a new and different collision representation… it’s still too early to tell.

– Casey

I was thinking exactly the same thing as you.

Recast is a great solution to explore the walkable mesh of the world, but maybe I didn’t read carefully to understand the complexity of the problem and probably I am missing something :)

Anyway, it is always cool to read about different approaches to solving problems, especially when the approach doesn’t give the expected results. It is great to experiment; thanks for the post :)

This is great stuff, and an excellent example of working smarter over working harder. AAA games usually deal with these errors by having lots of QA testers and designers comb over the walkable geo looking for “I got stuck here” bugs, and most big games ship with at least a few.

How much do you think the complexity of this solution would be multiplied by having a larger player state space, e.g., jumping, running vs. walking vs. crouch-walking, and other means of propulsion? The algo visualizes very nicely when it’s just a player walking; I’m curious whether a jumping player would make the plot unreadable.

It depends on how high-dimensional a space you want to have. At some point, RERT’s start being a win again, if you’re talking about systems where there are many different configurations for the player (an example might be a game where you have multiple configurable limbs, e.g., a giant robot game or something like that, where you had to consider the placement and orientation of the limbs as to whether or not the robot could move through various openings). So the flood-filling approaches in general will only scale up to some small number of dimensions, I think. But simple running and jumping probably aren’t very difficult to shoehorn in.

As for drawing things, well, that’s another story. I agree it might be tough to visualize the results of such a system even if it did work well. I haven’t thought much about that problem since we don’t have to solve it for this game :)

– Casey

Based on my experience with random walk on a console title with jumping, no, the plot is still readable.

This is an interesting read.

Thanks for the article.

I really like the Bubble Meshing algorithm described here: http://www.research.ibm.com/trl/projects/meshing/bubble/bubbleE.htm

Unfortunately, it might be patented.

Good post. I wrote a system once to perform the same task. My version was more of an “e-mail test lead to perform a full-game navmesh test” type solution though. I think the testers would like your system more. :)

I had what was labeled “triangles of doom”. They were navmesh triangles that were broken in some way. Couldn’t walk into, could walk into but not out, etc. There was a small variety and I hated them all. By the end of development the fixes were all data/level design side. When a new triangle of doom was found I would debug, learn how to detect, color code triangle in navmesh debug render mode, and automatically output all debug triangles.

You have two “bad” cases already – slopes and one way pathing. Both should be possible to programmatically detect and spew to console / debug output. They may get fixed by physics system bugs but eventually you’ll reach a point where all pathing bugs are due to bad data. Automatic detection and reporting of that is necessary.

So many commenters here have complimented you on your post, but not one of them has complimented you for being a GBV fan. You have good taste in music, sir.

Thanks for posting this!

Are you testing each edge to ensure you can walk both directions?

When you eventually do write your system for overnight testing via user input, may I suggest looking to Roomba for inspiration? Namely the wall following behavior.

Yes, the edges get tested in both directions. This is actually a natural consequence of the algorithm if you don’t take steps to prevent it; because each point attempts to connect with those around it, so long as you don’t intentionally prevent it from checking points to which it already has an edge, it will naturally traverse the reverse direction of edges incident on it.

Right now I do allow some edges _not_ to be tested in both directions, namely those that occur in oversampling regions. Right now the walk mapping time is dominated by the cost of calling the player motion code, so if/when I rewrite and optimize that, if it is significantly faster than it is now I will probably force reverse-direction testing on all edges.

– Casey
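
The point about reverse edges falling out of the flood fill can be sketched as follows. This is an illustration under stated assumptions, not the Walk Monster code: `can_walk` is a hypothetical stand-in for the call into the player motion code, `neighbors` for the sampling scheme, and the oversampling exception mentioned above is not modelled.

```python
from collections import deque


def explore(start, neighbors, can_walk):
    """Flood-fill from `start`, recording every directed edge that succeeds."""
    edges = set()
    visited = {start}
    queue = deque([start])
    while queue:
        a = queue.popleft()
        for b in neighbors(a):
            # Deliberately no "already connected" check: the edge b -> a
            # gets tested when b is expanded, even though a -> b was
            # tested earlier.
            if can_walk(a, b):
                edges.add((a, b))
                if b not in visited:
                    visited.add(b)
                    queue.append(b)
    return edges


def one_way_edges(edges):
    """Edges that were walkable in one direction only."""
    return sorted((a, b) for a, b in edges if (b, a) not in edges)


# Toy world: five points on a line, with a drop between 2 and 3 that can
# be walked down but not back up.
def line_neighbors(p):
    return [q for q in (p - 1, p + 1) if 0 <= q <= 4]


def ledge(a, b):
    return not (a == 3 and b == 2)


found = explore(0, line_neighbors, ledge)
print(one_way_edges(found))
```

Because every expanded point retries all of its neighbours, the one-way drop shows up as an edge whose reverse is missing from the set.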

Very interesting.

Been there, done that from another angle, that is navmeshes for AI movement. I’ve seen the AI navmeshes being used for player movement in many games, so I dare to share a few things I’ve learned.

You should definitely take a look at the Recast https://code.google.com/p/recastnavigation/

Test the demo (if using Windows, I recommend building it first), and take a look at the different debug options to see how the navmesh gets built. I made it, so take my opinion with a grain of salt :)

While I was working on Recast I went through a bunch of sampling schemes and eventually ended up using voxelization as the basis for walkable area detection. It was several orders of magnitude faster than anything else, and so robust that you can throw pretty much anything at it.

When using tiles the build process is embarrassingly parallel (each tile can be processed independently). That also allows you to do dirty rectangle type of incremental updates and what not. Since the process is quick, you can often afford to make a quite dense grid. Usually folks are using 20 or 30cm cell size, but in some cases where the navmesh is used for player movement people are going as dense as 5cm cell size.

I’ve often run into debates on whether the navmesh or physics should drive player movement. Physics and AI navigation are always just approximations, and there is usually really bad coupling between the two. The worst thing you can do is to make one of them drive the other. I’m biased, but I would pick navmesh first and use physics to “plant” the character into the world (i.e. feet, walk height, etc).

I’m not sure I understand how voxels solve the same problem. I suppose if you are saying a priori that you don’t care what the _original_ definition of walkable was in the game, then sure. But if you want to know what the _game_ considers walkable, voxels don’t help you, because how do you know whether or not you can reach that voxel, or what the shape of the actual walkable area is inside the voxel?

But yes, I agree that if/when I start replacing the collision system itself, then something like rebuilding the walkable regions with voxels is on the table, since there I’m technically in control of what the definition of “walkable” is in the first place, so I do not have to use the definition induced by the game’s collision system. But this is a very different problem statement than determining what the walkable area is _for a given game system_.

Know what I mean?

– Casey

It is worth pointing out, also, that the appealingness of this kind of voxel approach is often inversely proportional to your standards of gameplay quality.

We want to make walking around feel good, while also ensuring that puzzles aren’t broken / etc. This often involves making collision volumes that don’t actually match visible geometry: say, we might bevel them in a certain way so that you bounce off them smoothly, or we might intentionally make them smaller than visible geometry in some cases and bigger in others.

The appeal of doing a voxelization of visible geometry is that everything happens for you magically and you can walk around. But the more stuff you actually want to hand-tweak, the less magical the automated thing is.

In other words, the idea of making a game where you can walk up any terrain unless the slope is X is fundamentally not what we want to do here. It is pretty far from what we are doing. What we are doing is more labor-intensive, but the belief is that it will yield better results for this kind of game.

(But yeah, as Casey implies, there is also an interpretation layer that happens via the code that affects the definition of what “walkable” is. We do a lot of hand-placement of collision volumes to set up this definition, but the code needs to extrapolate all the details, and there are lots of ways to do that.)

If you’re thinking about possibly taking over the whole movement and collision thing for this, then you could also look at other kinds of automatic ground mesh generation.

e.g. at PathEngine we have a mesh generation pipeline based on BSP combination of source geometry, which is much more precise than voxels, and which then sounds like it could be quite a good fit for this.

You can choose exactly what geometry to pass into the automatic generation process, of course, so you’d pass in those bevelled / smoothed collision volumes instead of the world geometry components they are designed to replace, and the ground mesh coming out of the BSP combination would then reflect your bevelled or smoothed geometry appropriately.

I agree that choosing which steep areas should be impassable purely based on ground slope is not at all ideal, and in this case I’d recommend turning off maximum slope checking and instead flagging walkable areas explicitly in the source geometry (e.g. by painting flags into terrain data, or marking objects by type, or by instance, or keying this partly off of the applied texture, or whatever).

The BSP processing tends to be a lot more computationally expensive than voxel processing, but can be parallelised.

I totally understand what you’re saying. I was jumping too early to propose a silver bullet I have used myself :) But really I’d like to challenge you to think outside the box where the norm is that the physics system is _the_ way to implement player movement.

Any explicit navigation space is kind of a precomputed dual representation of your dynamic model. Being able to visualize it is a very powerful design tool, as implied in the original post. Just like your physics collider approximates the player movement, so does the choice of “sampling” approximate your navigable space. There are no right solutions in either system; you only get a good enough compromise.

The way I have implemented the voxelization is this: first I use conservative voxelization to detect solid space, then for each potentially walkable voxel I check if there is enough space for the character to stand there, and mark it as walkable. Finally, the walkable area is dilated by the walker radius. The end result is a conservative estimate of the space where the character can stand without colliding with any geometry or falling off cliffs.
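
A much-simplified sketch of the last two steps, assuming the solid-space pass has already produced a 2D grid of vertical clearances (free height above the floor in each cell, 0 for solid). The radius step is read here as shrinking the walkable set, which is the same as growing the blocked set, and cells outside the grid are treated as blocked. This is not Recast's implementation, and all names are hypothetical.

```python
def standable(clearance, char_height):
    """True where there is enough headroom for the character to stand."""
    return [[h >= char_height for h in row] for row in clearance]


def shrink_by_radius(mask, radius_cells):
    """Keep a cell only if every cell within `radius_cells` of it is
    standable. Cells outside the grid count as blocked, which is the
    conservative choice."""
    rows, cols = len(mask), len(mask[0])
    out = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            ok = True
            for dr in range(-radius_cells, radius_cells + 1):
                for dc in range(-radius_cells, radius_cells + 1):
                    if dr * dr + dc * dc > radius_cells * radius_cells:
                        continue  # outside the circular footprint
                    nr, nc = r + dr, c + dc
                    inside = 0 <= nr < rows and 0 <= nc < cols
                    if not inside or not mask[nr][nc]:
                        ok = False
            out[r][c] = ok
    return out


# 5x5 room with 2.0 units of headroom, except a low beam over the centre.
clearance = [[2.0] * 5 for _ in range(5)]
clearance[2][2] = 1.0
walkable = shrink_by_radius(standable(clearance, char_height=1.8), radius_cells=1)
for row in walkable:
    print("".join("#" if cell else "." for cell in row))
```

In the toy room only the four interior corner cells survive: the border is lost to the radius, and the low beam removes the centre cell and its direct neighbours.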

Automatic walkable area detection (using voxels or otherwise) does not mean that you need to use the visible geometry to generate the walkable areas; you can use the same tweaks and tricks you’d do for physics colliders. I have found (RLE) voxels to be a very flexible data format for all kinds of tricks which are more costly otherwise (i.e. Minkowski sums, morphological opening, closing, lowpass filtering, painting, surface types, etc). You can easily use volumes to paint the surface for special purposes (i.e. to disable walking, or mark water areas).

What I am saying is that your proposed solution does not seem to address any problem that we have in any way that we cannot already address… yet it introduces new problems via the sampling step.

This is characteristic of many tech-driven ideas: they claim to solve problems, but really don’t (or else they solve the small problems but leave the big ones). I used to be mainly a programmer who had tech-driven ideas. After a while I learned to see the way in which they so often go awry. It can be subtle if you are not used to seeing it.

This has nothing to do with “thinking outside the box” or whatever. I do plenty of that, believe it.

Casey, thanks for posting this! I’m amazed how you managed to explain this topic so well – I have never gone beyond Python programming for beginners or something, and yet this was really quite intriguing. Also, I study physics, and I thought a few of your explanations would make differential geometry a bit easier to stomach. Great job.

Also, thanks for writing about Windows 8 in the past, and for doing the Jeff and Casey Show – I enjoyed that a lot :) – and I actually found it via a link on the old Braid blog. Oh, and thanks for your work in the games industry.

A few brief suggestions for your open questions:

#1: For post-processing and data visualization, what about selecting a point, picking a colour depending on the proportion of white to red edges going from that point, then painting a polygon on the ground at that point. That polygon would always end at 1/2 of each edge.

So in a rough sense, you could have something like “green = trivial to traverse” to “red = cannot be traversed” or something.

I have no good idea what to do in border areas where edges cross each other. Perhaps my suggestion is just impractical.

#4: You might want to find out how long it takes to go from point of interest A (perhaps there’d be one at each of the several hundred puzzles) to point of interest B? Although that sounds like the kind of optimization Jon Blow might dislike for being too Valve-y. I don’t know. At the very least, these numbers might give some additional ideas for optimal movement speed or world size.

Also, perhaps you could use your existing data to find bottlenecks? (As I understand it, The Witness is not about navigational puzzles whatsoever, so bottlenecks may be mostly undesirable.) I.e. if you go from point A along a random path to point B and come along point C, that’s a bottleneck to that one path, which is okay. But if most paths in an area go from point X to point Y and come along that same point C, that might be a problem – or an indication to make point C more interesting.
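
The travel-time measurement from #4 could be sketched as a shortest-path query over the walk graph. This assumes the graph is available as 2D point positions plus directed walkable edges and that the player moves at a constant speed; `travel_time` and the data layout are hypothetical.

```python
import heapq
import math


def travel_time(points, edges, start, goal, walk_speed):
    """Shortest walking time in seconds from start to goal, or None if
    the goal cannot be reached (Dijkstra over directed edges)."""
    adjacency = {}
    for a, b in edges:
        adjacency.setdefault(a, []).append(b)
    best = {start: 0.0}
    heap = [(0.0, start)]
    while heap:
        dist, node = heapq.heappop(heap)
        if node == goal:
            return dist / walk_speed
        if dist > best.get(node, math.inf):
            continue  # stale heap entry
        for nxt in adjacency.get(node, []):
            cand = dist + math.dist(points[node], points[nxt])
            if cand < best.get(nxt, math.inf):
                best[nxt] = cand
                heapq.heappush(heap, (cand, nxt))
    return None


points = {"A": (0.0, 0.0), "B": (3.0, 0.0), "C": (3.0, 4.0), "D": (10.0, 10.0)}
edges = [("A", "B"), ("B", "A"), ("B", "C"), ("C", "B"), ("A", "C"), ("C", "A")]
print(travel_time(points, edges, "A", "C", walk_speed=2.5))
print(travel_time(points, edges, "A", "D", walk_speed=2.5))
```

Keeping the edges directed means a one-way drop shortens the trip in one direction only, so the A-to-B and B-to-A times can legitimately differ.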

In any case, thanks for writing this.

Thanks for the kind words! Regarding the suggestions:

1) One of the problems with doing what you propose is that I don’t currently clean up edges that cross each other. That’s a problem just in general, and not a fault of your suggestion :) But it’s what would prevent that suggestion from looking clean without me first doing a pass to fix intersecting edges. But in the places where edges aren’t crossing too badly, then I suspect it would produce a reasonable mesh, yes – kind of the dual of the edges, if you will.

4a) Actually I do kind of like the idea of determining the length of time it takes to traverse parts of the island. I don’t know how Jon feels about it, but it does seem like a good idea to have some measurements like that just to understand how long it will take people to go between certain places they might want to.

4b) I’m not sure exactly what you mean by “bottlenecks”. Are you saying to create sort of a “chances that I will be in this location given random traversals” value for each vertex, and then you look at that to see where players tend to be, all other things being equal? That does seem like a useful idea, although as you suspected, I don’t know if Jon would be interested in this kind of data because at some point it would definitely cross into “metrics-driven game design” territory :)

– Casey
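
One way to read the per-vertex "chances that I will be in this location" value is sketched below, assuming traversals are shortest paths between randomly chosen pairs of walk-graph vertices. Real players do not move like this, so treat it purely as an illustration; `visit_frequency` and the toy graph are hypothetical.

```python
import random
from collections import deque


def shortest_path(adjacency, start, goal):
    """Breadth-first search; returns the vertex list from start to goal,
    or None if the goal is unreachable."""
    parent = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt in adjacency[node]:
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    return None


def visit_frequency(adjacency, samples, seed=0):
    """Fraction of sampled traversals that pass through each vertex,
    not counting the traversal's own endpoints."""
    rng = random.Random(seed)
    nodes = sorted(adjacency)
    counts = {n: 0 for n in nodes}
    for _ in range(samples):
        start, goal = rng.sample(nodes, 2)
        path = shortest_path(adjacency, start, goal)
        if path is None:
            continue
        for node in path[1:-1]:
            counts[node] += 1
    return {n: counts[n] / samples for n in nodes}


# Two small areas (X1, X2) and (Y1, Y2) joined only through point C.
adjacency = {
    "X1": ["X2", "C"],
    "X2": ["X1", "C"],
    "C": ["X1", "X2", "Y1", "Y2"],
    "Y1": ["Y2", "C"],
    "Y2": ["Y1", "C"],
}
freq = visit_frequency(adjacency, samples=500)
print(max(freq, key=freq.get))
```

In this toy graph every trip between the two areas has to pass through C, so C is the only vertex with a non-zero frequency.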

I wonder if you’d get faster results by doing a Quake-style BSP tree over the whole thing (using collision geometry triangles as your splitting planes) to find convex regions, with connectivity. This does involve some computational geometry type fiddliness (esp. with arbitrary, intersecting geometry), but you should get a much sparser graph, with no chance of missing any tiny gaps (assuming you get the floating point math right!).

Then, verify that this connectivity is actually walkable, by traversing the tree and checking each “portal” (only ones with at least one walkable surface attached to it). Most of them should be trivial-accept (two large empty spaces connected by a large portal). For smaller portals you could do “proper” swept intersection type stuff, or just throw a bunch of random physics probes at it like you’re doing now, but at least you’re only doing it for the edge cases.

Very interesting! A bit beyond my capabilities, though.

I think the overhead views you demonstrated would be especially useful in identifying problem areas, if I understand the visualization.

Problems marked in yellow: http://img571.imageshack.us/img571/3064/thewitness.png

You could perhaps improve the visuals by drawing transparent filled cyan polygons between walkable points, and transparent bright-red polygons between wall points. Though that’d assume that the space between walkable points doesn’t contain collision boxes so tiny that they fit between your points. Perhaps you should also mark in bright yellow every collision box that is so small it’s likely to be missed by your sampling of the terrain?

I also don’t see in the last picture how you realized that you can walk one direction but not the other. I’m not noticing the visual signs that indicate that.

The post was already a bit long as it is, so some things got left out :) The truth is that you cannot tell that information from the _screenshot_, you can only tell that information when you actually watch it run. When it flood fills down the first side, it will not penetrate through, but when it finds its way around to the other side via another path, it then reconnects back up.

This is not actually how I will do this detection in the future, it was just an accident that happened during testing :) In the future I plan to actually draw edges that were only traversed in one direction, but I haven’t gotten to that yet.

– Casey

This would’ve been a great GD Magazine article

Seriously, who are you guys?

And you make money doing this stuff?

Sounds like a party to me. Get a real job! :P

Could this be the next Myst?

I’m hoping it’s going that direction, adding in a shooter style rather than P&C.

I’ve never seen such beautiful and unique scenery in a game before. I’m really looking forward to exploring this island.

Braid forever changed my taste for games.

I’m expecting The Witness to be even more interesting.

I hope you keep raising the game quality bar, so others will follow. Who knows, maybe some day. :)

I think this remains as one of my favorite posts. Still very curious how everything turned out.