Just before Christmas break, I fixed a bug in the transform manipulator and checked it in. Having a transform manipulator is a nice addition to any editor, and we were all happy to have it working. But shortly thereafter, I had to leave for a cross-country family Christmas trip. This meant no more real Witness work for about ten days, because my laptop only has Linux on it, and right now the Linux version of The Witness is still under construction.

This hiatus was the primary motivation behind writing my previous entry as a cliffhanger: although I had fixed the bug, I didn’t have time to adequately document the bug via debugger inspection, so it would have been hard to write anything meaningful showing how the bug manifested itself in practice. I wanted to wait until I had a chance to go through every last little bit of it, so I could be as specific as possible. In that sense, it was meant to be a bit of a cliffhanger for me as well.

But as it turned out, it was much more of a cliffhanger than I’d originally anticipated.

Good News, Bad News

The good news: congratulations to those of you who posted “floating point precision” as a suggestion for the cause of the bug. You aren’t any dumber than I am.

The bad news: we’re all dumber than we should be.

For those of you who don’t already know all about floating point precision problems, I’ll explain the problem we all thought we were solving. The original code, verbatim, looked like this:

bool ray_vs_plane(Vector3 *xpoint, Vector3 const &p0, Vector3 const &dir,

Plane3 const &plane) {

Vector3 n = plane.get_normal();

float denom = dot_product(n, dir);

float epsilon = 0.0001f;

if (fabs(denom) < epsilon) return false;

float t = - ((plane.d + dot_product(n, p0)) / denom);

if (t < 0) return false;

*xpoint = p0 + dir * t;

return true;

}

To fix the bug, I wrote a modified version to use for the transform manipulator:

bool ray_vs_plane(Vector3 *xpoint, Vector3 const &p0, Vector3 const &dir,

Vector3 const &plane_point, Vector3 const &plane_n)

{

Vector3 local_p0 = p0 - plane_point;

float denom = dot_product(plane_n, dir);

float epsilon = 0.0001f;

if (fabs(denom) < epsilon) return false;

float t = - (dot_product(plane_n, local_p0) / denom);

*xpoint = p0 + dir * t;

return true;

}

I’ve done exactly the same operation, but instead of using the d value of the plane equation to solve for t, I just move the ray itself to be centered around a point on the plane, so that the d value is implicitly zero. My reasoning was very simple: if I subtract two things that I know are close together (the camera location and the picked point on the manipulator), I will have less cancellation error than if I subtract two things that may be wildly different in scale (the d value and the dot product of the camera location and the plane normal).

This is pretty weak sauce as far as numerical precision improvements go. I am far from an expert on the subject (read some Forman Acton, for example, if you want to see how the pros roll). But, when I did this, it fixed the bug perfectly, so I assumed, at least for the moment, that it must be a reasonable fix.

But, honestly, I didn’t really have a good feeling about it.

Christmas Stew

Sometimes, when there’s a lot to do, you’ll live with a bug fix because it works, rather than because it makes sense. But invariably, at least for me, I end up going back to look more closely at something if I don’t feel like I fully understood it. I am sure this is mostly just an obsessive personality trait, but regardless, I rarely find the time to be wasted, because I almost always learn something from the process.

In this specific case, I didn’t have time to look into the problem in depth before I left. I planned to do so for the eventual writeup, but of course, I couldn’t do much without being able to run the code, and while I was on Christmas break, that wasn’t an option. Unfortunately, this meant that I had the entire Christmas break to stew about the bug that I wasn’t sure I’d really fixed properly.

From the beginning, the thing that didn’t sit right with me about the fix was that when Jon originally reported the problem to me, he didn’t mention anything about it happening more on the outskirts of the island than on the interior. Jon’s a very experienced programmer, so if something like that were happening, he would probably notice and include that in the report, because he’d know it would help me find the bug faster.

Instead, it seemed pretty clear that the bug was related more to the camera angle than the position in the world. While obviously the camera angle affects the input values, and could have a number of effects on the numerical precision of the program, it’s not intuitively the kind of thing that I would expect. If these sorts of algorithms were that sensitive in this way, I would have expected to encounter similar problems with camera-relative plane intersections many times before — but I never had.

The more I thought about that, the more it gnawed at me that I couldn’t go see exactly what was happening and prove to myself why the fix worked. I did have the game on my laptop, because I’d done some initial Linux porting work, so I realized that maybe, if I was lucky, I could reconstruct the problem without actually being able to load and run the editor.

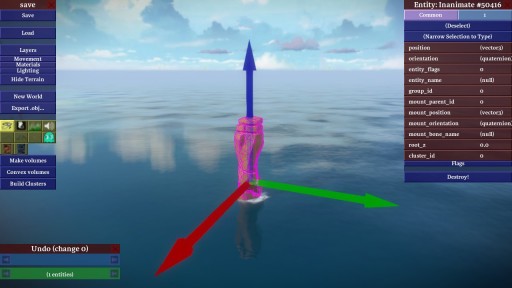

That is when I became very thankful that I had been writing these blog entries, because it meant I had stopped to take this screenshot before I left, specifically so I could do the writeup over break:

Do you see what I see? That lovely entity number up in the corner is pure gold for step one of reconstructing the problem. Because I could see the entity number, I was able to go into the repository and get the data file that says where that entity is placed in the world. That let me know the numbers of the center point of the manipulator: -304.114, 193.4923, 4.7582.

If you’re an experienced 3D programmer, then you probably are thinking the same thing that I was thinking when I saw those: how the hell could I be having numerical precision problems with such low numbers? The Witness’s scale is one unit per meter, which means that, at the scale of the dragging we’re talking about, there should be absolutely no problems with solving a single plane equation, regardless of how sloppily you did it.

But if that was the case, then how did I fix the bug? This lead me to step two of reproducing the problem: writing a test program. I wrote a little C program that would take numbers around that point and, for a large set of programmatically generated planes and rays, perform the intersection using both the old ray intersection routine and my “improved” one.

Perhaps unsurprisingly, these results reinforced my suspicions that there wasn’t enough of a difference between the two routines to account for the original bug. In all the tests, my “improved” routine was no more than one decimal digit more precise; so if the original routine produced intersection points that were actually, say, 0.0001 units away from the plane, the new routine might produce ones that were 0.00001 away.

Clearly, the fix just plain shouldn’t have worked. But it did.

Why?

The Wrong Part of the Post

I would love to be able to say that I guessed the real reason before stepping through the code, but I absolutely didn’t.

When I returned from Christmas break, I eagerly booted up the Witness and stepped through the code very carefully, keeping accurate track of all the numbers as I went, waiting to find that super-elusive numerical thing that I had overlooked. To my complete surprise, it turned out to have absolutely nothing to do with precision.

In fact, somehow, although I didn’t know it at the time, I actually did write about the root cause of the problem in my original post. It just wasn’t in the part that I thought. Indeed, rather than Always Look Behind You foretelling the eventual solution, it was Foreign Languages that contained the hint: due to my naivete with The Witness codebase, I misunderstood what it was doing with planes. It turns out that this one line from the original routine was the entire culprit:

Vector3 n = plane.get_normal();

You will notice that it only occurs in the buggy version of the ray intersection code. In my version, I couldn’t use the plane structure, because I didn’t want an “a, b, c, d” representation of the plane. I wanted “a, b, c, p”, if you will, where p is an actual 3D point on the plane. So I never called get_normal().

Why does this matter? Well, upon stepping through all the code, I realized that get_normal(), being true to its name, takes the unusual step of normalizing the a, b, c values of the plane before returning them to you as a vector. This means that the a, b, c values of the plane that you pass in when you create the plane aren’t the ones you get out.

That wouldn’t be a problem if it weren’t for the fact that, when you create one of these planes, it does not normalize the values you pass in:

Vector3 plane_n = cross_product(camera_back, translation_axis);

plane_n = cross_product(plane_n, translation_axis);

grabbing_plane = plane_from_point_and_normal(hit_pos, plane_n);

This is my fault on its face, because hey, it does say normal, and normals are traditionally supposed to be normalized. But in keeping with my reference to foreign languages, this is something I really just didn’t think about, because I am used to the way I’ve always done things with planes: nothing is normalized automatically, everything is just left unnormalized, because back in the day, normalization was very expensive and you never did it in a math routine unless it was absolutely necessary.

So plane_from_point_and_normal was actually doing what I thought it would: it just stored plane_n as the a, b, c values, and computed d with the inner product. But get_normal() was not doing what I thought it would. Rather than just returning me the vector {a,b,c}, it was normalizing them before giving them to me, which meant all my calculations with them would no longer agree with the d value computed in plane_from_point_and_normal.

And therein, clearly, lies the entirety of the bug.

Just to underscore how much of a native language issue this really is, when I showed Jon the preview of this article, he said, “If you are thinking of the plane as a geometric object, of course the normal is unit length, what else would it be?? But if you are thinking of it as some specific equation all the time, then it is natural to care about the a, b, c, you put in.” So I can only assume, were he using my math library, he would’ve been tripped up by the fact that I don’t normalize normals automatically, exactly the inverse of the problem I encountered with his.

I honestly don’t believe that this sort of thing is a case of us being presumptuous or sloppy; it’s just the price you have to pay for being able to program complex things at a reasonable speed. If we constantly have to think about what it means to work with a vector, or a plane, or a quaternion, we’re going to be a lot slower. It’s much more efficient to have a consistent perspective, and always rely on it.

And of course, the cost is bugs like this — but that’s a very small cost relative to the benefits.

Partial Credit

So, where does that leave you?

Clearly, automatic partial credit goes to everyone who wrote in suggesting that numerical precision was the problem. You came to the same conclusion I did with the information I knew at the time, which was all you had to go on. You certainly can’t be blamed for not finding the real bug since you would have needed the source code to the Witness math library to even see it. And it is true that the numerical precision of the routine could have been improved, as I believe I was able to verify separately with my test program.

But by the same token, nobody gets automatic full credit. There were a lot of reasons why, even with only the information in the original article, you could have said, “I don’t think it’s numerical precision, Muratori.” Maybe I am ascribing overly heroic attributes to the man, but I feel like Forman Acton would have known, from looking at the plane intersection snippet, that there wasn’t any way to lose enough precision in there to account for the bug as I described it, at least not in any way that would have been substantially improved by the suggested fixes people wrote in (or the fix I actually did, for that matter).

So in order to actually get full credit, you’re going to have to earn it, I think, but on the honor system. Ask yourself, if you had encountered this bug, and you had “fixed” it as I had, would you have gone back and figured out what was really happening before moving on to something else?

If you can honestly answer yes, then you get full credit, for this is about as good as you can hope to do as a working programmer, I think. You’re no Forman Acton, of course. . . but then again, who among us is?

I’m happy with my partial credit and learning the lesson: even if you fixed a bug, you better understand why it had happened before and why what you did fixes it. Thanks for writing!

p.s.: I love how clouds look in your game!

I don’t really understand all this stuff about normals, but it sounds like you were using it to find the point where the mouse intersected with the plain and it was giving you what from your perspective would be wrong values, perhaps in mapping the points on the plain to points on the screen. If this is the case then I’d like to congratulate myself for being 1/4 of the way there. My hunch would seem to have pointed me somewhat in the right direction even though I have no clue about this 3D math stuff.

I’m not sure whether or not I would have just accepted the fix or investigated it further. I guess it would have depended on my mood.

The major takeaway is, as it often is, to _always_ investigate further. For example, in this case, even though the bug was completely fixed by my original solution, the insight into how the plane code worked has surely saved me future Witness bugs of this same nature, because now I know how to use the plane code properly.

– Casey

Yes, I realize that. It still depends on my mood as to whether or not I would actually do it, regardless of what the reasons or benefits are.

Anyway, I’d like a layman’s explanation for what is happening so that I’ll know how close or far off the mark my guess was.

The problem is that I wrote my code expecting the plane class to store the plane equation {a, b, c, d} and then return it to me exactly as I stored it. So, if I computed (ax + by + cz + d) somewhere before storing the plane, then later asked for the values back so I could do (ax + by + cz + d) again, I need to be getting the same exact numbers back. Otherwise, obviously, the computations don’t match.

Since the Witness code was normalizing the a b c on the way out, my second (ax +by + cz + d) ended up using _differenct_ a b c values, which leads to completely erroneous results, all of which will be wrong by whatever the ratio was between the original vector {a, b, c} and the new normalized vector {a, b, c} / |{a, b, c}|.

It’s all just a simple misunderstanding about what the class did internally. Once I actually looked at its code, it was very clear what the problem was – it is not a subtle problem.

I’m not sure I understand exactly what your guess was, but it doesn’t sound close to either answer, unfortunately :(

Next time!

– Casey

Thank you for the explanation. Now what does normalization mean in this context?

It means adjusting the individual components of the vector such that the length of the line itself is 1.0 units. Since I don’t do this sort of programming much I’ll probably get the terminology wrong but hopefully the meaning is clear enough.

In 2D, a triangle with base 4 units and height 3 units has a hypotenuse of 5 units, from hyp^2 = base^2 + height^2 (which is Pythagoras’ theorem). The base and height are the X and Y components of a vector and the hypotenuse is the length of that vector.

To normalise the vector we divide each component in the vector by the length we calculate, giving us the normalised version of the vector.

For my example above, now we have 0.8 (4 / 5) for the base and 0.6 (3 / 5) for the height. So:

hyp^2 = base^2 + height^2

hyp^2 = 0.8^2 + 0.6^2

hyp^2 = 0.64 + 0.36

hyp^2 = 1.0, so hyp = 1.0.

You calculate the length of a 3D vector with a very similar equation: len^2 = x^2 + y^2 + z^2 and you can scale the components in the same way as I described up above. Normalised vectors simplifies a bunch of things in 3D math.

Well, I can claim in good faith that I didn’t respond to the original post because all your hints were pointing towards numerical precision, and from your statements about where the problem was it seemed like it could be hardly anything else, but that I just couldn’t see how the numbers involved were big enough for that to be the issue.

If the bug had been in front of me, would I have looked at it a second time after “fixing” it that way? I don’t think I can answer that, because I don’t think I would’ve tried to fix it that way without spending a lot of time staring at the actual numbers and trying to figure out where I was losing precision. But if I had explained it to someone I trusted, and they had said “oh, it’s numerical precision, try this” and they made the changes you did and then the problem went away… would I have looked a second time? Probably not.

I think a big part of being a good programmer is knowing when to go back and work through something, and when it’s good enough and you should work on something useful. Clearly, your mind nagged you into looking closer, and it was the right call.

But you could have easily had a similar situation where looking into it revealed that the problem was exactly what you thought, and you wasted hours of your life on something that meant nothing.

The takeaway here is not ‘always look into things’ but that good programmers have honed their instincts as to when to look into things or not.

There’s no way I’d have ever expected get_normal() to have a side effect on the object. Rename it to something like normalize_and_get_normal() ?

Where else might functions that look like accessors have side effects?

It does not have a side effect on the object. It just returns a unit vector.

Is there a set release date yet?

Not yet. As soon as _we_ know, we’ll let you know, don’t worry! :)

– Casey

I am thrilled that I get partial credit, as I still feel stupid about the simple programming mistakes I make as an inexperienced coder. I am glad that I was not completely correct though, because now I have learned an important lesson:

If you are working in an unfamiliar code base, never trust that a function does exactly what you expect it to. Always investigate.

It reminds me of how ashamed I was of my current coding style upon reading this:

http://kotaku.com/5975610/the-exceptional-beauty-of-doom-3s-source-code

Luckily for now, I’m still coding alone. So nobody has to decipher the meaning and purpose of my poorly designed functions. But I am glad to be learning, and have really been enjoying your write-ups, Casey. Particularly this one.

As an aside, I’d be a bit wary of that article on the Doom 3 source code.

– Casey

I had read that article at kotaku as well, but as a non-programmer I don’t have the knowledge to judge it’s legitimacy.

Why do you say you’re wary of it, Casey?

Essentially everything it mentions is not really interesting as an isolated example of “beauty”, or even good programming practice. Source code is beautiful at a much deeper level than the things they’re talking about in that article, and the beauty of the Doom 3 source code (if it is beautiful – I have no idea, I’ve never looked at it) surely has nothing to do with the things the person highlighted. I’ve seen very beautiful code that does none of those things, or actively contradicts many.

– Casey

To be honest, yeah, the whole article just seemed like it would add a bunch of extra work for minimal (sometimes none) aesthetic gain.

It’s highly subjective. Program in a consistent way, that’s more important.

Look up the common conventions for Python, Ruby and CoffeeScript and then tell me what you think about that article.

Can’t really ever bring up coding without getting into discussions about taste or relevancy of certain practices. It’s interesting food for thought regardless, as a young inexperienced programmer. Even if Doom 3’s source code is horrid, it’s certainly much better than mine.

My code is confusing and convoluted, doesn’t follow any conventions whatsoever and tends to lack a unifying structure that really feels well thought out. And that’s because it wasn’t. Sometimes you do just have to say Eff it to refactoring for the Nth time and just finish the game though. (Working on that one too.)

That makes sense. Have you looked into component-based entities?

https://archive.fosdem.org/2012/schedule/event/game_entities.html

I think the overall architecture of the project may be the most important part. I’m considering integrating FSMs into handling messages once they’re broadcast to entities, possibly in combination with a minimal-effort routing system via reflection.

Only partial credit for me! There is no way I wouldn’t have been satisfied with the original solution as you described it.

And hearing the real source of the problem, I don’t understand why the bug wasn’t more common.

BTW, I’ve been eagerly waiting for this follow-up and you did not disappoint!

I have to say all of your hard work is a big inspiration to me. I’m a budding software dev and aspiring game developer. I’m pretty young and new at it (21-years of age), and I’ve never really felt a sense of completion with any of my projects — which is what I would expect. It feels like there is always infinitely more things I can do to “improve” or add to a game. I’m sure you’ve run into this yourself, and I guess I’m just wondering if you do feel a strong sense of completion before you release the game, or if you look back and think “Oh, I could have improved this one part of Braid, if I had just waited”.

Oh and this might seem like completely off-topic, but this article just made me think of the “infinitely” many things to improve a game or its source code. A lot of software is “never done”, but games are not that type of software. At some point you have to declare it “done”.

My gut feeling was numerical precision, but for a bug like this I wouldn’t have tried any fix without stepping through the code in the debugger to see why it was going wrong, or looking at logs of the data (sometimes I’ll make a huge circular array and log in-memory).

If I was more math-savvy then maybe this would’ve fallen into my ‘trivial-enough’ category but even then I’m not sure about that. The lesson from this, in my opinion, is that you should always verify you have understood the defect properly BEFORE implementing any solution.

So… how would you prevent this from happening in future?

There’s the math and algorithm mistakes in programming, but these are usually easy for people with a computer science background to fix.

It’s the bugs made by naming conventions and assumptions that are easy to make and tough to find.

I remember spectacularly breaking a build because when I assumed a variable was called foo_binary was actually a binary value. Turns out people were using the high order bits to store “other” information.

WaitWaitWait…. “right now the Linux version of The Witness is still under construction” …

For us in the Linux gaming community, that’s hugely exciting! I (and many others) sadly thought that Jonathan had given up completely on it because of audio API problems and weak tools (well, weak for his requirements at least).

Should we have hope, maybe, that we’ll also be able to play this gorgeous adventure/puzzler?

Yeah, Casey was doing a Linux port. Partially so he could work on the game in his preferred environment! So, that is one way a Linux port could come about.